Voice control was the stuff of science fiction throughout the 20th Century. But in the last two decades, voice control has entered the mainstream. Voice assistants like Siri and Alexa are embedded in home devices, headphones, and even cars.

But, how did we get to this point? What is the connection with machine learning at the network edge? And how can you create your own voice-activated edge device? This article, part of a series on machine learning at the edge, answers all these questions and more.

Speech recognition in the real world

Voice control always fascinated futurologists and Sci-Fi authors alike. Back when it was first proposed, it must have seemed like a distant dream. But over the past decade, voice control has become routine and mainstream. This is thanks to a combination of factors.

Advances in speech recognition and natural language processing, the availability of powerful computers for machine learning, and the growth in high-power edge-devices. Nowadays, we can see examples of voice control all around us.

Virtual voice assistants

Virtual assistants, such as Alexa, Siri and Google Assistant, have driven a huge take-up in voice control systems. In essence, a virtual assistant listens to your instructions and acts on these. For instance, you can ask it to play music, to tell you the weather, or to navigate you to your destination.

In general, these virtual assistants all work similarly. They require a suitable edge device with network connectivity and a powerful backend. Typically, the edge device may be a smartphone, a smart speaker, or, increasingly, some other device like a TV or pair of headphones.

The edge device just tries to detect a “wake word”. It sends the voice message to the backend for processing and then handles the result that gets returned. This process clearly depends on a good Internet connection. However, recent improvements in edge technology mean more and more functionality can be kept on the device.

Cars

Driver distraction is one of the biggest causes of deaths and injuries on our roads. As a result, car manufacturers have invested billions into driver aids designed to help reduce distractions. One of the most powerful is adding voice control to cars. This allows the driver to interact with the infotainment system in a totally hands-free manner.

Unlike the voice assistants above, such systems cannot rely on having network connectivity. As a result, all the voice recognition and processing must be done within the edge device.

The search for voice control

As mentioned above, voice control grew out of advances in speech recognition and NLP along with increased computing power. Creating functional voice control required computer scientists to solve a number of problems.

Firstly, how do you record a person speaking and convert this into text? Next, how do you parse that text to extract the meaning? Finally, how do you work out the correct response?

These problems have interested computer scientists since long before the invention of the modern computer. Indeed, the idea of teaching computers to understand humans dates back to the earliest days of computing. Each of these problems required a different solution

Speech recognition

In the 1990s, more and more people got access to computers at work and at home. Few of these people were able to type though. So, a lot of effort was invested in creating systems that would allow a human to dictate to a computer.

This process of converting your voice into words on the screen is known as speech to text or speech recognition.

The earliest speech recognition systems were created in the 1950s. They were able to distinguish single spoken digits. However, it took until the late 1960s until speech recognition became a serious research area. By the 1970s, systems were being developed that could recognise longer words and even phrases.

The real breakthrough came with the application of hidden Markov models to the problem. By 1987, this resulted in the Katz back-off model, which allowed practical speech recognition on computers or specialised processors.

Throughout the next decade, systems got better and better at speech recognition across multiple languages. By the mid-1990s, the technology had advanced sufficiently for companies to start selling commercial speech recognition systems.

Early speech recognition often relied on training the system to recognise a single voice. This was achieved by asking the user to read out a specific passage of text. This text included all the possible phonemes, parts of speech, etc. to allow the system to learn that user’s voice.

More recently, we have seen speech recognition applying machine learning approaches to learn to understand different accents. This avoids the classic problem where the computer is unable to understand someone with a strong accent. Modern systems are now able to understand multiple regional and national accents.

Natural Language Processing

Of course, just being able to write down what a person says is not enough. Voice control also requires the computer to understand what is said. This is a much harder problem known as natural language processing or NLP for short. Here, the computer must learn what you actually meant. If you have learned a foreign language, you will know how hard this can be.

The problem is, human language depends on all sorts of factors. Context, emotion, knowledge, and idiom all change the meaning of a sentence. Often, there are many ways to say the same thing, even for something as simple as making a phone call. “I’m phoning my parents”, “I’m calling home”, “I must give my dad a call”, etc. NLP is the process of teaching a computer about the structure and meaning of human language.

For decades, NLP was a theoretical field. Computers simply weren’t powerful enough to solve the problem. Nowadays, computers are getting better and better at it. This is largely down to improvements in machine learning, especially deep learning. The latest approaches combine several different machine learning technologies.

- Supervised learning from large corpora of recorded and annotated speech.

- Reinforcement learning to improve performance based on human feedback.

- Transfer learning to allow data scientists to finetune existing models, such as BERT, ELMO, or GPT-2.

The resulting systems can understand more and more human language.

Decision making

The final requirement for a voice control system is deciding how to respond to the user. In other words, what action should the system actually take? There are many approaches for this. In simple systems, you could use a rules engine. That is simply a list of actions to take given a set of input conditions.

Many voice assistants use a variation of this. For instance, Amazon Alexa allows you to write your own Skills. Here, you are able to specify what you expect a user to say and provide the appropriate response.

However, for voice assistants the range of possible instructions is completely open-ended. So, increasingly reinforcement learning and unsupervised learning are used to allow the system to react to the instructions it hears.

So, now you understand a bit about how voice recognition and voice control work. But what about applying this in practice? For the rest of this article, I will explain how you can actually implement a simple voice controller.

A practical voice control implementation

This example is based on a simple voice controller model that can recognise the words ‘yes’ and ‘no’. The model is created in TensorFlow and ported to TensorFlow Lite, allowing it to run in low-power edge devices.

Hardware requirements

We are going to use an Infineon XMC4700 Relax development kit for the implementation. This kit is based on an ARM® Cortex®-M4 core running at 144MHz, with 325KB RAM and 2MB of flash memory. The board provides an Arduino shield header, making it easy to add peripheral devices once you have soldered headers into place.

Obviously, since this is a voice controller, the first requirement is to add a microphone to the board. You can choose pretty much any Arduino shield with a microphone. I am going with the Infineon S2GO MEMSMIC IM69D Shield2Go, which provides 2 MEMS microphones on an Arduino Uno shield. Before you can use the shield, you will need to solder on headers.

Software requirements

The software for this project can be found on Mouser’s GitHub. Clone the repository and open the Software folder. Here, you will find all the files you need for the project. This includes:

- The sample data for the commands ‘yes’ and ‘no’ (based on fast fourier transforms of captured speech samples)

- The actual model for recognising the commands

- A responder to handle the resulting decision

- A main file to link all the pieces.

In addition, you will need a suitable development toolchain, such as the Arduino IDE or Infineon DAVE IDE. I’m going with the Arduino IDE. This means I need to add the correct Infineon XMC Library.

- Go to Preferences and find the entry for “Additional Boards Manager URLs”. Paste https://github.com/Infineon/XMC-for-Arduino/releases/latest/download/package_infineon_index.json and click OK.

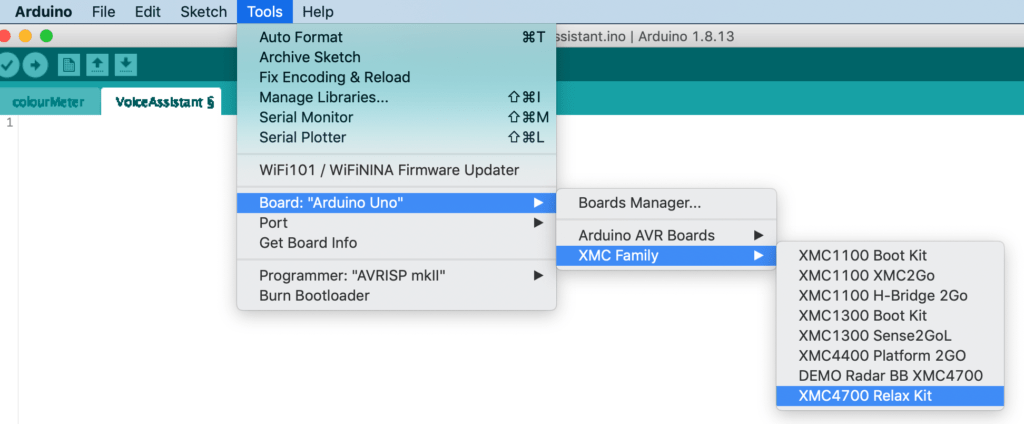

- Click Tools > Board: “Arduino Uno” > Boards Manager. NB by default you will see ‘Board: “Arduino Uno”, but if you already used the IDE, you will see the latest board family you used.

- Enter XMC in the search box and press Enter.

- You should see an entry for “Infineon’s XMC Microcontroller”. Click Install.

- Once the install is completed, click Close.

Now you are able to set the correct board in the IDE. To do this, go to Tools > Board: “Arduino Uno” > XMC Family > XMC4700 Relax Kit.

The last thing you need is the SEGGER J-Link software. This will allow you to access the onboard debugger and programmer on the XMC4700 Relax Kit. The software can be found here.

With all that done, you are ready to actually build the project.

Building the project

Fortunately, the software you cloned from the Mouser GitHub repository has been written specifically for this board. This means compiling the software is pretty simple. First, connect the board to your computer using the debug Micro-USB port near the RJ45 jack. Go to Tools > Port and make sure the correct port is selected.

Note: You may need to identify which COM port the board is connected to. You can do this in Windows using the Device Manager. Or in MacOS type ls /dev/tty.* in the terminal and look for the correct port in the list.

Second, you need to rename the main-functions.cc file to voice-control.ino. You also need to rename the Software folder to voice-control. This will allow the Arduino IDE to recognise this as a project.

Third, open the renamed voice-control project in the Arduino IDE. Go to File > Open and browse to the correct folder. Select voice-control.ino and click Open.

The final step is compiling and uploading the software. This is really simple: Just go to Sketch > Upload and (all being well) the software will be compiled and flashed to your board.

Testing voice control

Make sure the microphone shield is connected to the XC4700 board. Plug the board into a USB power source (or your computer). Allow the board to fully power up. Now say the word ‘yes’ into the microphone. LED1 on the board should light up for 3s. Then say the word ‘no’. LED2 should light for 3s this time. If nothing is heard, or the system can’t recognise what is said, no LEDs will light up. If you want to use this as a real controller, you can easily modify command-responder.cc.

Going beyond simple voice recognition

All the practical examples we looked at so far in this series have been relatively simple. In the next article, I will look at more powerful hardware platforms that take edge ML to the next level. These platforms are specifically designed for running AI applications at the edge. They include:

- Google’s Coral TPU, a platform and ecosystem for creating privacy-preserving AI.

- Intel’s NCS2 or Neural Compute Stick, a plug-and-play USB stick specifically designed to bring deep learning to the edge.

- ST’s STM32 Cube.AI, a package that brings artificial neural networks to ST’s Cortex-based microcontrollers.

We will see how these platforms go beyond simple pre-trained ML models, enabling deep learning and unsupervised learning at the edge.