AI paradoxes are a hot topic. The use of Artificial Intelligence (AI) is rapidly growing and evolving – but with that comes an ever-increasing importance placed on ethical considerations. As AI becomes more intelligent, the consequences of errors become greater and often have a direct impact on safety. The need for well-defined ethical principles to guide the development and application of AI has never been greater.

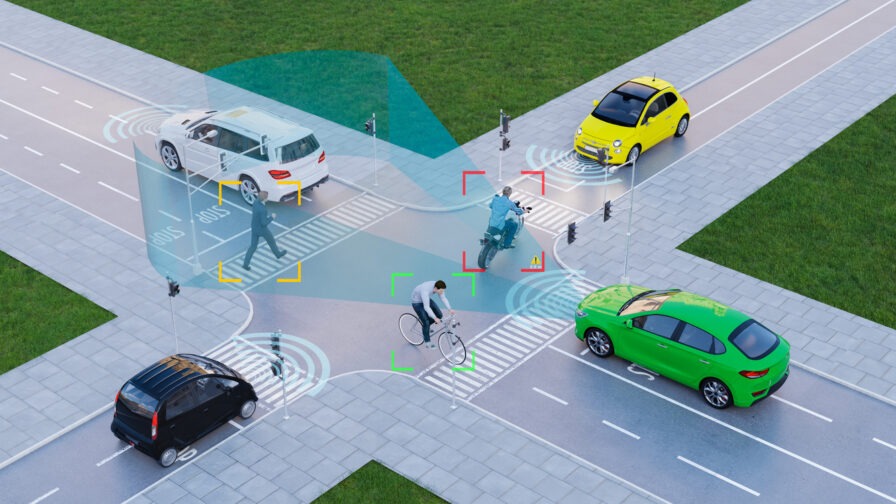

One area where the ethical implications of AI have gained particular attention is self-driving cars, or autonomous vehicles. Autonomous vehicles make decisions based on algorithms designed by humans. This raises some difficult questions: should these cars be built to prioritize human life over all other considerations? Should they always abide by traffic laws? What if one programmer’s ethics come into conflict with another’s?

Mauro Bennici, ethics expert at SME Digital Alliance, has launched a series of interviews titled “Devs Meet Ethics” in collaboration with Codemotion, exploring the implications and complexities of Artificial Intelligence technology. In the first episode, with Head of Digital Gianluca Desiderato, Mauro works through difficult ethical questions that inevitably lead to further ones, emphasizing that answers in this space are rarely black or white.

Self-Driving Car Paradox

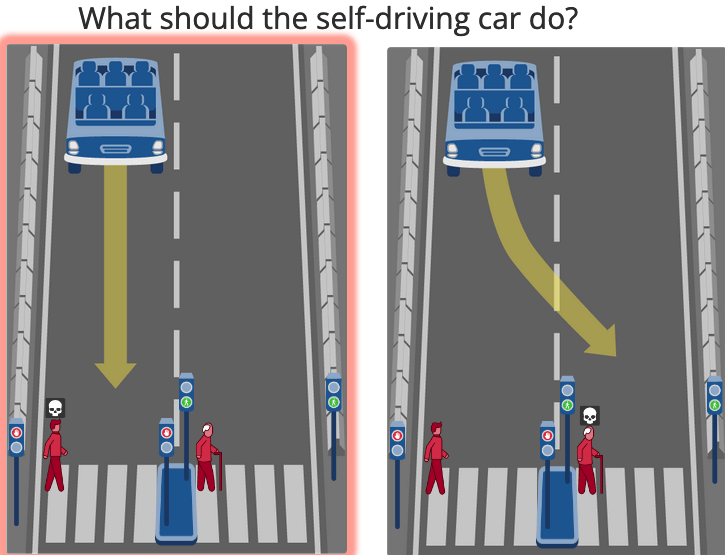

One of the most important ethical considerations for developers working on self-driving vehicles is known as “the self-driving vehicle paradox.”

This paradox states that it might be better for a self-driving vehicle to sacrifice its own passengers if doing so will save more lives overall. For example, if a car is driving down a crowded street and it needs to make a split-second decision between hitting two pedestrians crossing the street or swerving into a wall, which should it do? Should it prioritize saving its passengers or saving more people overall?

This is an incredibly difficult question to answer and one that must be considered by developers when creating AI systems for self-driving vehicles. It’s not enough to simply create a system that prioritizes safety; developers must consider all possible scenarios and be able to anticipate how their system would react in each situation. This requires a deep understanding not just of computer science but also of philosophy and ethics.
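To make the dilemma concrete, here is a toy sketch of how two different policies can reach opposite decisions on the same scenario. It is an illustration under loud assumptions, not how any real vehicle decides: the `Outcome` type, the two policy functions, and the harm counts are all hypothetical and were not discussed in the interview.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible manoeuvre and the people it is assumed to harm (made-up numbers)."""
    name: str
    passengers_harmed: int
    pedestrians_harmed: int


def minimize_total_harm(outcomes):
    """Hypothetical policy: pick the manoeuvre that harms the fewest people overall."""
    return min(outcomes, key=lambda o: o.passengers_harmed + o.pedestrians_harmed)


def protect_passengers(outcomes):
    """Hypothetical policy: protect passengers first, then minimize pedestrian harm."""
    return min(outcomes, key=lambda o: (o.passengers_harmed, o.pedestrians_harmed))


# The scenario from the text: two pedestrians ahead, or a wall to the side.
scenario = [
    Outcome("continue straight", passengers_harmed=0, pedestrians_harmed=2),
    Outcome("swerve into wall", passengers_harmed=1, pedestrians_harmed=0),
]

print(minimize_total_harm(scenario).name)  # swerve into wall
print(protect_passengers(scenario).name)   # continue straight
```

Both functions are a few lines long, yet each encodes a different ethical stance. Choosing between them is the developer’s decision, not the machine’s.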

This paradox has been discussed extensively in the philosophical and ethical literature, with no clear consensus. An AI system can only be as moral as its programming allows; human input is required to ensure that ethical principles are built in from the beginning. “Who should the machine save? The driver or the pedestrian? Many factors come into play: the culture, the ideology, and the ethics of the developer. Aspects such as the sensibility and experience of the person also weigh. This makes it very difficult to arrive at one answer”, analyzes Desiderato during the interview.

New Laws and Open Source

The legal implications of autonomous vehicles also need to be considered. Legislators around the world are scrambling to keep up with the rapid advancements in this field, and laws governing self-driving cars remain largely incomplete or vague. In some countries, legislation has been passed making it illegal for self-driving cars to break traffic laws; in others, the legal status of these vehicles is still unclear.

In this regard, Bennici raises a pertinent question: how can we ensure compliance across Europe with the ever-growing body of vehicle regulations? Implementing a law effectively at that scale is complex. What strategies should be employed to guarantee safe travel between countries regardless of local laws? Should car manufacturers use open source to ensure compliance with these laws once they are implemented?

Carhacking

Another important ethical consideration for developers working on AI systems for cars is carhacking. Carhacking occurs when someone gains unauthorized access to a car’s electronic control systems in order to take control of it remotely or manipulate its behavior in some way.

“Carhacking is not a simple issue. It’s more than just switching the radio station of who’s driving. It is also potentially dangerous for the passengers”, observes Desiderato.

This can have serious implications for both safety and security, as hackers could potentially use this access to cause accidents or otherwise interfere with traffic flow. In order to prevent this from happening, developers need to ensure that their systems are secure from external threats. This means designing secure networks, encrypting data transmission, and using authentication measures like passwords or biometrics.
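As one small illustration of the authentication measures mentioned above, the sketch below signs a command with an HMAC so the receiver can reject messages that were forged or tampered with. It is a minimal sketch under stated assumptions, not a description of any real vehicle protocol: the command strings, the `sign_command` and `verify_command` helpers, and the idea of a key shared between sender and car are all hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret provisioned to both the sender and the car in advance.
SHARED_KEY = secrets.token_bytes(32)


def sign_command(command: bytes, key: bytes) -> bytes:
    """Return an HMAC-SHA256 tag that authenticates the command."""
    return hmac.new(key, command, hashlib.sha256).digest()


def verify_command(command: bytes, tag: bytes, key: bytes) -> bool:
    """Recompute the tag and compare in constant time to resist timing attacks."""
    expected = hmac.new(key, command, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


command = b"unlock_doors"  # made-up command name
tag = sign_command(command, SHARED_KEY)

print(verify_command(command, tag, SHARED_KEY))            # True
print(verify_command(b"disable_brakes", tag, SHARED_KEY))  # False
```

This covers only message authenticity. A real system would also need encryption, protection against replayed messages, and secure key storage, none of which are shown here.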

Accidents and morals in the era of AI

Who is to blame in an accident involving a car driven by artificial intelligence remains a grey area. On the one hand, the owner bears some responsibility for the vehicle, and it could be argued that they must weigh the serious risk this technology poses. On the other hand, the accident may stem from technical faults on the manufacturer’s side that are beyond the owner’s control, and these would need to be taken into account when assigning liability. The issue needs further exploration to determine where exactly the blame should lie.

“I don’t have a strong position on this”, says Desiderato. “There are still few cases of accidents involving AI-driven cars, so it’s difficult to understand how this is going to evolve in the future”.

“There’s also a case in which an autonomous car was enabled for blind drivers in California. What would happen if an accident took place in this scenario? In this case, the only way to make it work was for the company to take responsibility beforehand in case of an accident”, explains Bennici.

Ultimately, ethical decision-making in the face of moral dilemmas is each developer’s personal choice. Developers are not robots; most have their own sense of ethics and standards that guide which technologies they choose to work on. If a developer disagrees with the purpose of the AI tool their company has asked them to build, it is important to say so before taking part in programming they consider unethical. In this sense, Bennici explains that new movements among AI developers promote conscientious objection in programming, which can become an important tool for defending morality and human values against technologies deemed unethical when life-or-death consequences are at stake.