Building an eCommerce store is far from easy. Lots of issues inevitably arise, relating to both the back- and the front-end. The larger the eCommerce operation, the greater the number and extent of the issues that need to be solved.

AS Watson is one of the leading companies in the large-scale eCommerce stores market. This international company is responsible for the development of numerous web platforms capable of managing vast numbers of simultaneous users as they buy products online.

Recently, we asked AS Watson about the key areas to consider when building large eCommerce stores with the goal of providing the best possible customer experience to end users.

Their response? Latency, scalability, and reliability. This article explores how such features are usually optimized by AS Watson, looking at a couple of use cases in which microservices played a crucial role.

Latency, Scalability and Reliability

Before discussing how microservices can improve latency, scalability and reliability, it is worth clarifying what these words mean in this context.

Latency

Latency represents the time delay before receiving a response from the eCommerce server. There are a lot of ways to reduce latency, and these relate to the back-end as well as the front-end.

At the back-end, reducing latency usually involves optimizing the algorithms or those parts of the code that are heavy to process. Of course, this optimization process requires knowledge of the server-side programming language being used, such as Java or PHP, to name just a few of many possibilities.

In terms of the front-end, optimization can relate to the specific rendering strategies implemented by the browsers, and can require knowledge of CSS, HTML, JavaScript and the many front-end frameworks currently in use, such as React, Vue or Angular.

The goal here is to reduce the time the browser needs to display the webpage to the end user. Shorter loading times translate directly into a better customer experience, which is why reducing latency is so important.

Scalability

Wikipedia defines scalability as “the property of a system to handle a growing amount of work by adding resources to the system”. In a large eCommerce store, it is crucial that the underlying platform can scale.

Optimizing this fundamental aspect means running several stress tests and estimating a plausible traffic load. While the running of a test can be entrusted to back-end developers and system administrators, achieving a realistic estimation can be quite tricky, and often depends on several variables.

This makes good communication between the business departments and the developers very important.

There are several tools that developers can use to generate synthetic but realistic traffic. For instance, AS Watson often relies on Gatling, a tool specifically designed for load testing.

Analyzing the results of your stress tests is crucial to the identification of possible bottlenecks, both within the code base and in terms of infrastructure. This can result in additional optimization of the back-end code, as well as increasing the computational resources available to the servers.
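
As a rough illustration of what such an analysis can look like, the sketch below summarizes a list of response times with nearest-rank percentiles and checks them against a latency budget. The sample values, the `budget_ms` threshold and the function names are assumptions made for this example; they are not AS Watson's figures, and this is not Gatling's own report format.

```python
import math


def percentile(samples, pct):
    """Return the nearest-rank percentile of a list of numbers."""
    if not samples:
        raise ValueError("no samples to analyze")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value such that at least
    # pct percent of the samples are less than or equal to it.
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples_ms, budget_ms):
    """Summarize response times and flag whether p95 fits the budget."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50": percentile(samples_ms, 50),
        "p95": p95,
        "p99": percentile(samples_ms, 99),
        "within_budget": p95 <= budget_ms,
    }


# Hypothetical response times (in milliseconds) from one load-test run.
response_times_ms = [120, 135, 128, 140, 131, 900, 125, 138, 129, 133]
report = summarize(response_times_ms, budget_ms=500)
print(report)
```

Here a single slow request leaves the median untouched but pushes the 95th percentile over the budget. Looking at tail percentiles rather than averages is what tends to reveal this kind of bottleneck.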

Reliability

System reliability is closely related to scalability. Here, the term refers to the infrastructure and to ensuring an optimal level of operational performance for a sufficiently long period.

The relation between reliability and scalability should be clear: a system that can scale to absorb a growing load is a system that can keep responding reliably. In other words, a good level of reliability is largely a consequence of good system scalability.

Optimizing reliability is heavily reliant on the aforementioned stress and load tests. The analysis of the results of those tests is used to establish the size of the infrastructure, which translates into achieving the desired level of reliability.

How microservices can improve scalability

In the last decade, microservices have taken on a central role in back-end architecture. Old-fashioned monolithic back-end set-ups are gradually being ported to something more oriented to microservices, and there are many reasons for this.

One of the key characteristics of microservices is the ability to scale. Because microservices are modular by design, each one can be replicated across several nodes to provide high availability while also handling high traffic loads.

AS Watson introduced microservices to improve their original monolithic architecture, intending to manage high traffic loads well. In particular, the company separated the Stock module, transforming this into an almost-independent microservice, able to communicate with the rest of the architecture.

Stock management is a common challenge for eCommerce stores. Customers do not like receiving messages like “this product is not available” during checkout, and certainly not after payment. This makes managing product availability properly very important, even – and perhaps especially – when there is a high number of concurrent requests. Achieving this goal is possible if you can provide a stock service that is both fast and fair.

The traditional approach involves locking the database record to build the customer queue. However, this can leave request threads blocked while they wait for the lock, and it puts the database under a lot of pressure. The result is a bad customer experience.

Microservices can help in reducing the pressure on databases

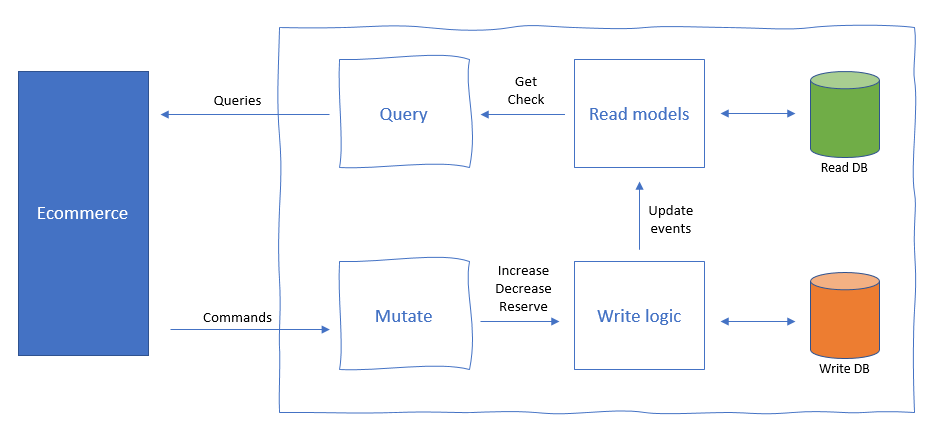

By introducing a microservices-based approach, AS Watson could switch to a new stock service, placing high-load management at the core of the project. Instead of locking the database, and placing customers into a fixed queue, the system can now register an event and let customers continue their purchases.
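
The idea of registering an event instead of holding a lock can be sketched as follows. This is a minimal, single-process illustration, not AS Watson's implementation: the `StockService` class, its method names and the in-memory queue are all assumptions made for the example. In a real deployment the queue would more likely be a message broker shared by several nodes.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class ReservationRequested:
    """Hypothetical event recorded when a customer asks for stock."""
    ticket: int
    sku: str
    quantity: int


class StockService:
    def __init__(self, initial_stock):
        self._stock = dict(initial_stock)
        self._events = deque()   # pending events, in arrival order
        self._results = {}       # ticket -> "confirmed" / "rejected"
        self._next_ticket = 1

    def request_reservation(self, sku, quantity):
        """Register an event and return at once; no record is locked."""
        ticket = self._next_ticket
        self._next_ticket += 1
        self._events.append(ReservationRequested(ticket, sku, quantity))
        return ticket

    def process_pending(self):
        """Apply events first-in, first-out so earlier customers win."""
        while self._events:
            event = self._events.popleft()
            available = self._stock.get(event.sku, 0)
            if event.quantity <= available:
                self._stock[event.sku] = available - event.quantity
                self._results[event.ticket] = "confirmed"
            else:
                self._results[event.ticket] = "rejected"

    def status(self, ticket):
        return self._results.get(ticket, "pending")


service = StockService({"shampoo": 2})
first = service.request_reservation("shampoo", 1)
second = service.request_reservation("shampoo", 2)
status_before = service.status(first)   # the customer keeps shopping
service.process_pending()
print(status_before, service.status(first), service.status(second))
```

The customer receives a ticket immediately and continues the purchase, while the outcome is decided later in arrival order, which is what keeps the service both fast and fair.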

The stock service can also be deployed into multiple replicated nodes to support a higher number of asynchronous requests. This offers a more stable and robust service, thereby improving the overall customer experience.

The implementation of the new Stock service was inspired by the CQRS (Command Query Responsibility Segregation) pattern, which allows read and write operations to be carried out independently.
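
To make the pattern more concrete, here is a small sketch of CQRS applied to stock levels. The class names, the tuple-based event log and the `catch_up` method are illustrative assumptions, not details of AS Watson's service. The write side only appends events, and the read side builds its own view from them.

```python
class StockCommandHandler:
    """Write side: validates commands and appends events to a log."""

    def __init__(self, event_log):
        self._event_log = event_log

    def add_stock(self, sku, quantity):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._event_log.append(("stock_added", sku, quantity))

    def remove_stock(self, sku, quantity):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._event_log.append(("stock_removed", sku, quantity))


class StockReadModel:
    """Read side: a view rebuilt from events, queried independently."""

    def __init__(self):
        self._available = {}
        self._applied = 0  # number of events already applied

    def catch_up(self, event_log):
        # Apply only the events this view has not seen yet.
        for kind, sku, quantity in event_log[self._applied:]:
            delta = quantity if kind == "stock_added" else -quantity
            self._available[sku] = self._available.get(sku, 0) + delta
        self._applied = len(event_log)

    def available(self, sku):
        return self._available.get(sku, 0)


event_log = []  # stands in for a durable event store
commands = StockCommandHandler(event_log)
read_model = StockReadModel()

commands.add_stock("shampoo", 10)
commands.remove_stock("shampoo", 3)
stale = read_model.available("shampoo")   # view not yet updated
read_model.catch_up(event_log)
fresh = read_model.available("shampoo")
print(stale, fresh)
```

Because reads never touch the write path, each side can be scaled separately. The trade-off is that the read view may briefly lag behind the latest writes, as the `stale` value shows.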

How microservices can improve reliability

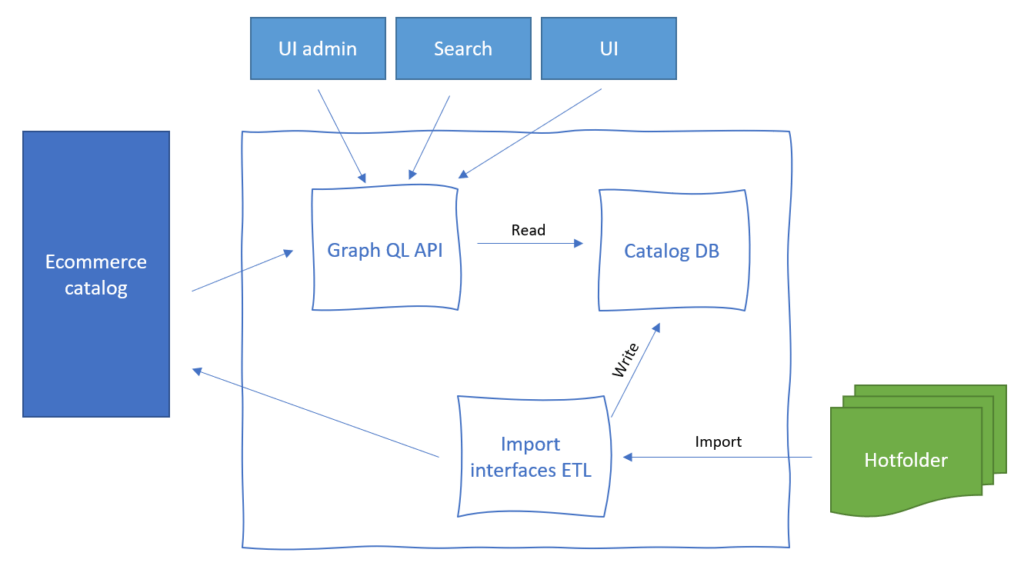

Another module AS Watson has moved outside the original monolithic architecture is the Catalog, which handles data management standardisation by interfacing with the internal data structures provided by AS Watson’s customers – the vendors on the relevant eCommerce platform.

Each vendor has its own data organization, so it is crucial to use a service that can make all data compliant with a common abstraction, which can then be used by the rest of the architecture.
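
One common way to achieve this is to write a small adapter per vendor that maps its records onto a shared product type. The sketch below assumes two made-up vendor formats; the field names, the `Product` type and the `normalize` function are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Product:
    """Common abstraction used by the rest of the architecture."""
    sku: str
    name: str
    price_cents: int


def from_vendor_a(record):
    # Hypothetical vendor A: flat record, price as a decimal string.
    return Product(
        sku=record["id"],
        name=record["title"].strip(),
        price_cents=int(Decimal(record["price"]) * 100),
    )


def from_vendor_b(record):
    # Hypothetical vendor B: nested record, price already in cents.
    return Product(
        sku=record["product"]["code"],
        name=record["product"]["label"],
        price_cents=record["pricing"]["cents"],
    )


ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(vendor, record):
    """Convert a vendor-specific record into the common Product type."""
    try:
        adapter = ADAPTERS[vendor]
    except KeyError:
        raise ValueError(f"no adapter registered for {vendor!r}") from None
    return adapter(record)


a = normalize("vendor_a", {"id": "A-1", "title": " Shampoo ", "price": "12.99"})
b = normalize("vendor_b", {"product": {"code": "B-7", "label": "Soap"},
                           "pricing": {"cents": 250}})
print(a, b)
```

Supporting a new vendor then means adding one adapter function, while everything downstream keeps working with `Product`.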

Product management is quite a complex topic in the retail world. The process comprises dozens of heterogeneous bricks, such as product information, SEO-related data, promotions, categorization, in-store availability, etc.

The data comes from multiple sources and should be processed in the proper order to respect several predefined formats. Such complexity within a monolithic application implies that long and heavy testing cycles are necessary.

Moreover, having complex processing jobs inside a rigid application has an impact on computational resources: RAM and CPU always need to be reserved even when there is basically nothing to do.

For these reasons, AS Watson decided to move the Catalog module outside the monolithic architecture as a new microservice. The goal was threefold:

- Manage computational resources effectively. Each microservice is able to scale independently so that each can utilise specific resources to parse the incoming data;

- Simplify the delivery process, as well as the whole testing cycle;

- Decrease the pressure on the core business logic implemented in the monolithic application, and on its databases, while these are in use.

What about latency?

It is worth discussing how microservices can affect latency. Generally speaking, moving a module outside the original application triggers an additional communication mechanism that affects response times. However, such mechanisms – if properly designed – can usually be tuned so that the additional overhead is almost negligible.

In exchange for this small cost in terms of latency, the architecture gains many advantages. Microservices’ modularity has already been discussed in terms of its positive impact on high availability and scalability, but maintainability hasn’t yet been mentioned.

Maintainability is also improved by microservices, allowing teams to focus on a specific feature of the entire system even if they have a very limited knowledge of the rest of the application.

All things considered, microservices represent a concrete way to improve an existing architecture, making it more flexible and manageable from a developer’s perspective. Of course, a full transition to a microservices-only model is not always possible, but even a hybrid solution like the one currently used by AS Watson can offer significant advantages, making a system more robust and adaptable. The company is also exploring other areas and functionalities that could be moved to microservices.