Key Takeaways:

- You can allow teams to work in parallel by designing gRPC APIs before starting to code.

- When teams work in parallel, you significantly decrease the time-to-market of your products.

- A sample workflow has a few key stages:

  - The teams start by designing the gRPC API.

  - Then, producer and consumer teams can work in parallel on their microservices.

  - The consumer team can use mocks to simulate the backend producer service.

  - Communicating feedback about the API specification during the development phase is essential.

  - Once the microservices are ready, the teams can test them together and release them to production.

- You can start by onboarding just one API to this new workflow; you don’t have to migrate your whole company to begin with.

- Working in parallel is excellent for team morale as there is less hard-deadline pressure.

- You can estimate the return on investment by using a simple spreadsheet.

See “Figure 1: Design-first gRPC service development and testing workflow” for a high-level overview of the workflow described in this article.

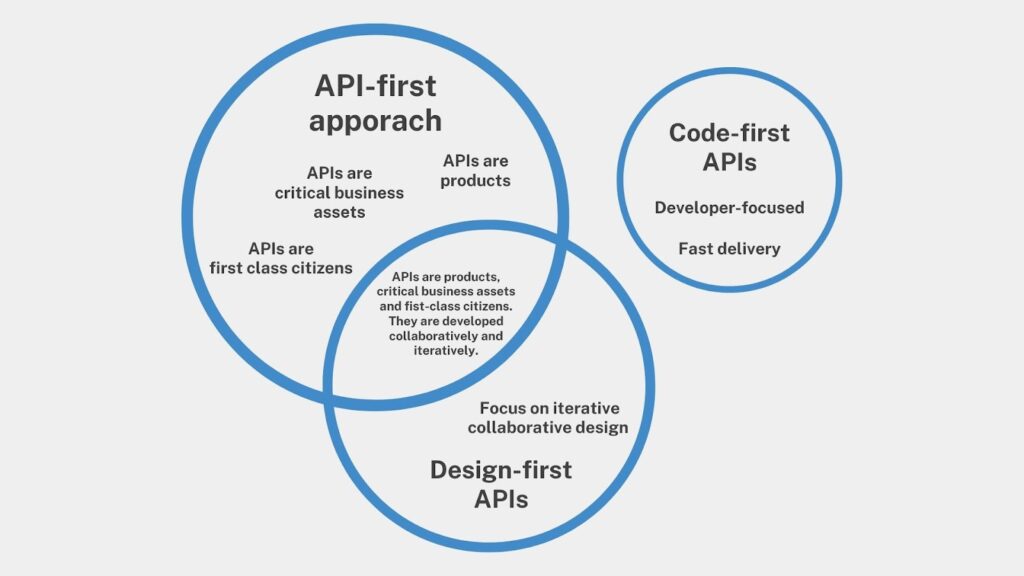

What are API-first, design-first and code-first?

What does it mean to follow the API-first approach? It means that APIs are first-class citizens that can be products the company offers its customers; they are critical business assets.

What is the API design-first approach? It means working closely with the client, product, architecture, testing and development teams to design the services available in the API and the data format before actually starting to code them.

It is in contrast to code-first APIs, where you would dive straight into coding and creating the API without any upfront specifications. The API schema can be defined in a format like OpenAPI for HTTP APIs, Protobuf for gRPC or AsyncAPI for RabbitMQ, Kafka and other asynchronous systems.

The API design-first approach is a proven way to parallelise teamwork and get products to customers much faster, and it remains relevant today. Design-first fits into the API-first approach adopted by companies that treat APIs as first-class citizens critical to their business success.

This article is for you if you have never used design-first APIs to parallelise teamwork. I share a sample workflow for adopting it in your teams, including how developers from different teams working with different architectures and tech stacks can work together.

I share experience gained from working with large enterprises (e.g. a global Spanish e-commerce company and a global UK media company) and smaller companies (e.g. a US insurtech startup and a UK challenger bank).

I use gRPC and Protobuf as the technologies in the examples in this article. There are other great articles discussing the popular HTTP and OpenAPI stack.

What is gRPC?

gRPC is a Remote Procedure Call (RPC) framework used by companies to communicate between systems, mobile applications and microservices that require high performance. It has been steadily gaining popularity in the past five years.

Typically, companies use gRPC along with Protobuf to encode data. “Figure 2: order-service.proto” shows a Protobuf file that defines the format of the messages. It describes an “Order” service where you can send “Item” messages to order items with a given “SKU”.

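```protobuf
// proto3 syntax: fields carry no required/optional labels
// and the first enum value is zero.
syntax = "proto3";

// A single product to order.
message Item {
  int32 sku = 1;
  int32 quantity = 2;
}

enum Status {
  SUCCESS = 0;
  ERROR = 1;
}

// Result of a purchase attempt.
message OrderStatus {
  Status status = 1;
  string message = 2;
}

service Order {
  rpc Purchase(Item) returns (OrderStatus) {}
}
```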
Design-first gRPC service development and testing workflow

It is advisable to work closely with your product and architecture teams to define your gRPC services before you start creating them. It will help to get your team off the critical path and work in parallel.

“Figure 1: Design-first gRPC service development and testing workflow” shows a high-level overview of a workflow you can use to be design-first.

To start, the teams get together and define gRPC APIs. Once they confirm what the API looks like, the producer and consumer teams can work in parallel to meet the defined API specs.

The consumer team then starts by creating gRPC service API mocks, so they can work on their consumer microservice without waiting for the producer team to have their microservice up and running. Once they create the mocks, they can proceed to code the consumer microservice, which, for now, will connect to the gRPC service mocks rather than to the real producer microservice.

In the meantime, the producer team can work on their producer microservice.

When the producer and consumer teams finish developing their microservices, they can test their work without mocks. They will test each microservice and verify that the two work together.

Once the testing is complete, the teams can release the microservices to production.

For simplicity, this workflow skips security testing, performance and load testing, penetration testing and other ways of ensuring the quality of your microservices. This sample workflow demonstrates how to parallelise work by defining APIs upfront; it does not focus on how to test microservices. I have also skipped API maintenance and other workflows. This workflow also assumes working with over-the-wire gRPC mocks, which are the easiest to get started with in my experience. There are other possible workflows, for example, in-process mocking of classes that relies on the generated gRPC stub connectors.
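As a rough illustration of the in-process alternative mentioned above, the sketch below replaces the client stub with a `unittest.mock.Mock` object, so no network is involved. The `place_order` function is a hypothetical piece of consumer logic, and the `SimpleNamespace` objects stand in for the message classes that the gRPC tooling would normally generate.

```python
from types import SimpleNamespace
from unittest.mock import Mock


def place_order(order_stub, sku, quantity):
    """Hypothetical consumer logic: call Purchase and report success as a bool."""
    # SimpleNamespace stands in for the generated Item message class.
    request = SimpleNamespace(sku=sku, quantity=quantity)
    reply = order_stub.Purchase(request)
    return reply.status == 0  # 0 is SUCCESS in the Status enum


# In-process mock that takes the place of the generated client stub.
stub = Mock()
stub.Purchase.return_value = SimpleNamespace(status=0, message="ok")

ok = place_order(stub, sku=1001, quantity=2)
```

The trade-off is that in-process mocks only exercise code inside one process and one language, whereas over-the-wire mocks also exercise serialisation and the network client.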

Now that we have covered the workflow’s main stages, let’s dig into the details of each one by one.

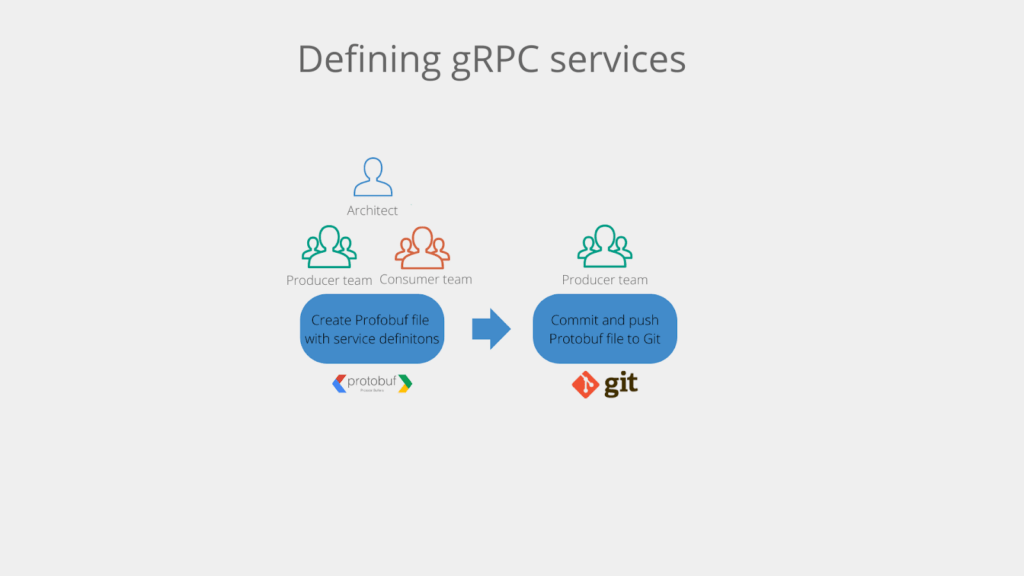

Defining gRPC services

To define the gRPC API, all the stakeholders come together and discuss the purpose of the services, typical use cases and data format. They look at the API from different angles like usability, security and compliance. The developers then create a Protobuf schema file that contains the data structure and service definitions. See a sample Protobuf file in “Figure 2: order-service.proto”.

Once the Protobuf file is ready, the developers commit the file to Git.

Creating gRPC service mocks

When you have the Protobuf definitions, the consumer microservice team can start constructing the gRPC service mocks. They will then connect the consumer microservice to these mocks. It will allow them to develop without relying on the producer microservice.

A developer will start by checking out the project with the Protobuf file. Then, they will need a gRPC mocking tool. There are a number of them listed on Wikipedia. For example, among the open-source options, there are Camouflage, GripMock and Mountebank-gRPC. There are also commercial tools like Traffic Parrot (disclaimer: I represent Traffic Parrot).

The commercial tools can help you, for example, by generating the mocks from the proto file, so you can focus on developing your prod code instead of spending too much time creating the mocks. You will have to pay for the commercial offering, though.

When you choose an open-source tool, there are no license fees. Also, if you know how to code and have the time, you can help improve the tool and fix any bugs you find.

Once the developer has created the gRPC service mocks in the tool of choice, they commit the mocks to Git. Keeping mocks in Git is in the spirit of keeping everything-as-code.
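To give a feel for what “mocks as code” can mean, here is a minimal sketch of mock definitions stored as JSON text together with the lookup a mocking tool conceptually performs. The JSON layout, the `find_response` function and the SKU values are all hypothetical; each real tool has its own file format, so check the documentation of the tool you choose.

```python
import json

# Hypothetical mock definitions, kept as text so they can be versioned in Git.
MOCKS_JSON = """
[
  {"rpc": "Order/Purchase",
   "request": {"sku": 1001},
   "response": {"status": "SUCCESS", "message": "ok"}},
  {"rpc": "Order/Purchase",
   "request": {"sku": 9999},
   "response": {"status": "ERROR", "message": "unknown SKU"}}
]
"""


def find_response(mocks, rpc, request):
    """Return the first response whose request fields all match, else None."""
    for mock in mocks:
        if mock["rpc"] != rpc:
            continue
        if all(request.get(key) == value for key, value in mock["request"].items()):
            return mock["response"]
    return None


mocks = json.loads(MOCKS_JSON)
reply = find_response(mocks, "Order/Purchase", {"sku": 9999, "quantity": 1})
```

Because the definitions are plain text, changes to them show up in diffs and code reviews like any other change.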

Once the gRPC service mocks are ready, it’s time to build a Docker image that combines the tool and the mocks. In our example, GitHub Actions picks up that the definition of the mocks has changed in Git, builds the Docker image and publishes it to the company Docker registry. There are a few other variants to this approach, for example, keeping mocks outside the gRPC mocking tool Docker image, which are out of scope for this simple and focused article.

Now that the mocks are ready, we can use them to help develop and test the consumer microservice!

Develop and test the consumer microservice in isolation

A developer working on the consumer microservice will face a problem. The producer microservice is not ready yet, as the producer team is actively developing it. So the consumer team developer cannot quickly develop and test the microservice as the gRPC API she wants to consume is not ready yet.

gRPC service mocks come to the rescue. The mocks will “simulate” the producer microservice server side so the consumer team developer can develop and test. That way, both the consumer and producer teams can work in parallel.

Often the same person working on the production code would have been the one creating the mocks.

The developer will start by running the Docker image with the gRPC service mocks locally on her laptop.

She will then code the microservice while it’s connected to the locally running gRPC service mocks. Those mocks are “simulating” the server side – the producer microservice. She is working in “isolation”, meaning that she does not rely on any external APIs or services and can configure the mocks herself as needed for the tests. Typically she will write automated tests and follow a BDD process of discussing with relevant stakeholders and capturing the requirements in spec-by-example or BDD tests.
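One common way to let the same consumer code talk to either the local mocks or the real producer is to read the target address from configuration. The sketch below is a minimal example of that idea; the `ORDER_SERVICE_TARGET` variable name, the default port `5552` and the `orders.internal:443` address are assumptions made for illustration.

```python
import os

# Assumed address of the locally running gRPC service mocks.
DEFAULT_MOCK_TARGET = "localhost:5552"


def order_service_target(env=None):
    """Return the host:port the consumer should dial for the Order service."""
    env = os.environ if env is None else env
    # Hypothetical variable name; unset means "use the local mocks".
    return env.get("ORDER_SERVICE_TARGET", DEFAULT_MOCK_TARGET)


local_target = order_service_target({})
shared_target = order_service_target({"ORDER_SERVICE_TARGET": "orders.internal:443"})
```

With the Python gRPC library, a string like this would typically be passed to `grpc.insecure_channel` or `grpc.secure_channel` when creating the client, so switching from mocks to the real producer needs no code change.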

It’s worth mentioning that the developer might often find issues with the gRPC service specification during this stage, for example, issues not anticipated during the design stage. It is critical that the developer communicates any concerns and feedback about the API specification to the producer team and whoever else is involved. It’s easier to change the specifications early in the API software lifecycle. An example of such feedback would be a usability issue with the API discovered through real-code usage, or missing data in a data structure.

She then checks her changes into Git and pushes them to the remote repository. The CI pipeline runs. It tests and builds the microservice artifact. We will look at the specifics of that in the next section. Once CI/CD completes, QAs might pick up the artifacts and test the microservice locally. Then QAs potentially test it in a shared environment as well.

Let’s have a closer look at a sample CI/CD loop.

CI/CD for the consumer microservice

Once the microservice coding is done, the CI pipeline can run and create the microservice artifacts. It will run the tests that the developer has written.

The CI agent will check out the microservice project. It will then start the Docker image with gRPC service mocks inside the CI agent just to run this one build. Then it starts the microservice and runs all tests locally. The tests are hitting the microservice APIs, which in turn communicate with the gRPC mocks of the producer microservice. The mocks allow the tests to pass even though the backend producer microservice does not exist yet. The pipeline ends with producing a tested microservice artifact.
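One practical detail in a pipeline like this is that the tests should not start before the mock container is accepting connections. The helper below is a minimal sketch of such a readiness check using only the Python standard library; the function name and default timings are assumptions. Note that a successful TCP connection only shows that the port is open, not that every mock has been loaded.

```python
import socket
import time


def wait_for_port(host, port, timeout_seconds=30.0, interval_seconds=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        try:
            # Open and immediately close a connection to probe the port.
            with socket.create_connection((host, port), timeout=interval_seconds):
                return True
        except OSError:
            # Not reachable yet: wait a little and retry until the deadline.
            time.sleep(interval_seconds)
    return False
```

A CI step could call `wait_for_port("localhost", 5552)` (with whatever port your mocks listen on) after starting the Docker image and fail the build early if it returns `False`.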

Communicate changes using Git PRs

It is important to remember that the API specification is a living organism. It should be updated and moulded based on learnings during development, testing and early usage of the services. The changes to the Protobuf specifications need to be communicated effectively to all interested parties.

One way of communicating changes is by using PRs (pull requests).

To propose a change in the API specification, a developer will pull the project with Protobuf files and make changes to those files. He will then create a PR with the proposed changes.

Then, the other team or involved parties can suggest changes to the proposal. The involved parties, such as the producer team, consumer team, architects, product owners and QAs, can go back and forth to make sure the changes meet their requirements. Once the changeset is confirmed, it gets merged into the master branch, and the new Protobuf files are used in both the producer and consumer microservices. The mocks might need updating as well. Some commercial tools might help you with that by highlighting which mocks are not in line with the new spec. If you use open-source gRPC mocking tools, you will need to assess the required change and then update the mocks manually.
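When reviewing such a PR, it helps to spot edits that could break existing consumers. The sketch below compares two versions of a message, described as plain dictionaries of field number to name and type, and lists changes worth flagging. It is a deliberately strict, simplified check written for illustration: the dictionary representation and the `currency` field are hypothetical, and Protobuf does allow some type changes (and removals with reserved field numbers) that this sketch would still flag.

```python
def breaking_changes(old_fields, new_fields):
    """Compare {field_number: (name, type)} maps and list edits to flag in review."""
    problems = []
    for number, (old_name, old_type) in sorted(old_fields.items()):
        if number not in new_fields:
            problems.append(f"field {number} ({old_name}) was removed")
            continue
        _new_name, new_type = new_fields[number]
        if new_type != old_type:
            problems.append(
                f"field {number} ({old_name}) changed type {old_type} -> {new_type}"
            )
    return problems


# Item message before and after a hypothetical PR that turns sku into a string
# and adds a new field. Adding a field with a fresh number is not flagged.
item_v1 = {1: ("sku", "int32"), 2: ("quantity", "int32")}
item_v2 = {1: ("sku", "string"), 2: ("quantity", "int32"), 3: ("currency", "string")}

report = breaking_changes(item_v1, item_v2)
```

A report like this can be pasted into the PR discussion so that both teams see which mocks and which consumer code need attention.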

Test the producer and consumer microservice together

Once the producer and consumer microservices are ready, the QAs can test them together. No mocks are used between the producer and consumer microservices at this stage. We do want to test if there are any issues when the real microservices communicate with each other. You might still need a gRPC mock to decouple yourself from other microservices and dependencies, though (for an example, see the “Backend Integration Test Environment” slide at 13m21s).

Both microservices can be started in a shared testing environment and manually or automatically tested. Once tests pass, the artifacts are promoted and ready for release.

Getting started is simple

Getting started with parallelising work by designing APIs before coding them can be done on both a small and large scale.

The good news is you can start with just one API and two teams. You don’t need to boil the ocean and migrate all your teams simultaneously. Once the project has been proven successful, scale to more groups.

You can use the spreadsheet from this article to calculate the ROI (Return on Investment) you will see when you implement this workflow. The article describes how to use the sheet. If your situation is different, feel free to contact me, and we can work on an ROI model for your specific situation.
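If you want a quick back-of-the-envelope estimate before opening a spreadsheet, the sketch below applies the classic ROI formula (net gain divided by cost). It is not the model used in the spreadsheet mentioned above, and every input figure is a made-up placeholder that you should replace with your own numbers.

```python
def roi(benefit, cost):
    """Classic return on investment: net gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical inputs; replace them with your own figures.
waiting_days_avoided_per_year = 40  # days a team no longer waits for an API
cost_per_team_day = 2_000           # cost of one team working for one day
adoption_cost = 20_000              # tooling, training and setup

benefit = waiting_days_avoided_per_year * cost_per_team_day
result = roi(benefit, adoption_cost)
```

A result above zero means the estimated benefit exceeds the adoption cost under the assumptions you entered.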

Next steps

Learning new things is fantastic, but the knowledge does not yield returns if you don’t use it!

If you are an architect or a software developer, it’s time to talk to your team members about this workflow and see if it applies to your situation.

If you are a team lead or manager, ask your team what they think about this workflow. Check if it would solve a critical problem you currently face, for example, a team waiting for another team’s API to be able to continue working.

If you have any questions, do not hesitate to reach out to Wojciech Bulaty directly via LinkedIn or email him at wojtek@trafficparrot.com.

About the author: Wojciech Bulaty specialises in enterprise software development and testing architecture. He brings more than a decade of hands-on coding and leadership experience to his writing. He is now part of the Traffic Parrot team. He helps teams working with microservices to accelerate delivery, improve quality, and reduce time to market by providing a tool for API mocking and service virtualisation. You can follow Wojciech on LinkedIn.