For many companies, development and operations are treated as separate entities – often at odds with one another. However, the benefit of combining them is starting to be recognized and most companies that are ahead of the game have turned to DevOps as a way to improve their development process. At the same time, there’s been a huge push towards using cloud-based computing.

Combining the two has many benefits. Firstly, using the cloud helps with the DevOps goals of streamlined, continuous integration, delivery, testing and monitoring. It also makes remote work much easier, giving you a real advantage in the current situation, when a lot of development discussions are taking place over teleconference services rather than in person.

With so many changes in these fields, it’s worth making sure you’re up to date. So, let’s take a look at some of the best practices for DevOps in the cloud.

Be Security Conscious

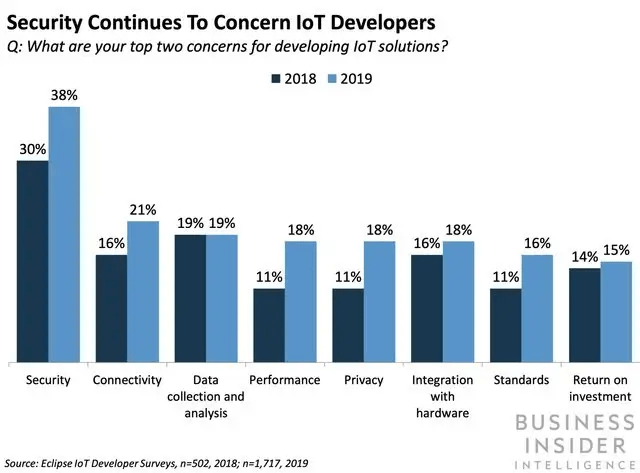

One of the most common mistakes in any emerging field is not paying enough attention to security. This is particularly noticeable when it comes to the cloud, because security standards change frequently and different technologies may rely on different methods. Cybersecurity matters all the more because a breach in one system can spread to everything connected to it. As the IoT (internet of things) continues to grow, the risks of a security breach are no longer restricted to your desktop computer. Anything linked to the internet – smartphones, smart cars, even your kitchen lights! – can be affected.

Rather than relying on everyone in your team to stay up to date, it’s better to hire a security officer. This role involves keeping up with the best security practices for the DevOps process when using the cloud, and implementing appropriate solutions.

Of course, you could go one step further. DevSecOps is an up-and-coming field, encouraging security to become part of the general development process. If you have a larger team, it could be worth spending time building a more thorough development process with an emphasis on security.

One helpful solution is to use identity-based security models. These only allow authenticated members of a team to access certain technologies or files. You can expect an IT team to react well to this. However, you may find less technologically educated members of the wider company could struggle.
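To make the idea concrete, here is a minimal Python sketch of an identity-based check. The `User` class, the `ACCESS_POLICY` table and the resource names are all made up for illustration, and in a real setup the `authenticated` flag would come from your identity provider rather than being set by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """A team member. `authenticated` is a stand-in for a real login check."""
    name: str
    groups: frozenset
    authenticated: bool = False


# Hypothetical policy: which groups may access which resource.
ACCESS_POLICY = {
    "deploy-keys": {"devops"},
    "sales-deck": {"devops", "sales"},
}


def can_access(user: User, resource: str) -> bool:
    """Allow access only to authenticated users in a permitted group."""
    if not user.authenticated:
        return False
    # Resources missing from the policy are denied by default.
    allowed_groups = ACCESS_POLICY.get(resource, set())
    return bool(user.groups & allowed_groups)
```

Denying by default means a resource nobody has written a rule for stays locked, which is usually the safer failure mode.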

Some staff will assume that, if things are only shared using a video call app with security measures in place, there’s no risk. They may find it frustrating to have to authenticate their identity every time they access something, especially if they know the details have only been shared within the company. This is where having a dedicated security officer really comes in useful, as it frees up the development team from having to also do some level of training and tech support. That’s likely to be very popular with that team!

Choose The Right Tools

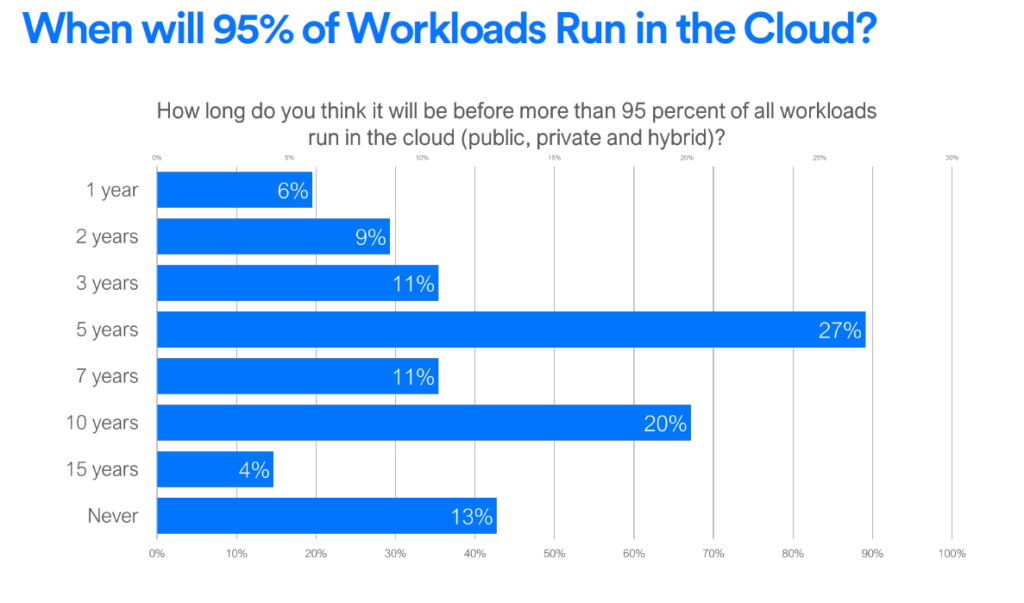

There’s one big advantage to starting out in a new field, and that’s the ability to choose the right tools from the beginning. With many companies expecting to be fully in the cloud eventually, it’s worth getting in early. Rather than having to fall in line with what a company has already invested in, you can research the best possibilities, and make your case.

Finding and leveraging the right tools is especially important for using the cloud. There are so many options out there, and it can be tempting to choose one and specialize. However, as it’s such a fast-moving field, you don’t want to end up locked into something that may not suit you further down the line. Familiarizing yourself with a variety of tools will allow you to pick out those most suited to your project, rather than relying on just the ones you know. Luckily, some tools can be combined into packages – UCaaS (unified communications as a service) is a great example of this.

Choosing tools that work with different clouds allows you to seamlessly change, depending on the business needs or project specifications. It also allows you to use the same tools on both public and private clouds, rather than using one tool for backend work and having to adjust to another for more public-facing aspects.

Sometimes, choosing the right tools involves knowing when not to make use of the cloud. For many companies, a hybrid of cloud and edge computing is the best fit. Understanding why certain things work and others don’t is an important part of having a successful hybrid strategy. While the cloud has many advantages – such as being globally accessible – it has some disadvantages too.

There is often some latency between the cloud and your access point, and there are some areas in which this can be challenging to manage. If, for example, you have an inventory management system, then you want it to be accurate. If there’s a level of inaccuracy, you can end up overselling products. That can lead to an annoying experience for customers, which is bad for your reputation and bottom line. By keeping some tools on the edge, you can avoid these kinds of problems.
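As a rough illustration of the overselling problem, the sketch below keeps the authoritative stock count close to the point of sale and reserves items atomically, so an order is refused rather than accepted against an out-of-date figure. The `LocalInventory` class and the SKU names are hypothetical, and a real system would also need to sync these counts back to the cloud.

```python
import threading


class LocalInventory:
    """Stock counts held on the edge, next to the point of sale."""

    def __init__(self, stock):
        self._stock = dict(stock)
        self._lock = threading.Lock()  # serialises concurrent reservations

    def reserve(self, sku, quantity):
        """Reserve stock atomically. Returns False instead of overselling."""
        with self._lock:
            available = self._stock.get(sku, 0)
            if quantity <= 0 or quantity > available:
                return False
            self._stock[sku] = available - quantity
            return True

    def available(self, sku):
        """Current count for a SKU (0 if the SKU is unknown)."""
        with self._lock:
            return self._stock.get(sku, 0)
```

Because the check and the decrement happen under one lock, two simultaneous orders can’t both claim the last item.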

Invest in Infrastructure

How many times have you joined a project team, only to discover there’s no organizing structure in place? Most of us have been there – trying to work out cryptic subfolder names, or figure out just where that API is pulling its data from! While it can often seem easier to leap straight in and organize as you go, it usually ends up leading to those kinds of situations. No matter how advanced your DevOps skills are, you need a strong organizational system.

If you’re hosting a lot of services and resources in the cloud, you need to make sure to have governance infrastructure in place. At minimum, it needs to have a directory. Being able to accurately trace anything within your system is vital – and makes it much easier to keep your network secure.

Having this infrastructure in place from the start also allows you to create policies on how everything will be managed. Putting limitations in place on who can access what, how things can be accessed, and where data can be drawn from will help you control your applications better (and, once again, make keeping them secure much easier).
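A directory with a policy attached doesn’t have to be complicated. The Python sketch below is one possible shape, with invented names throughout (`Resource`, `Directory`, the example sources): every resource must have an owner, may only draw data from approved sources, and can be traced back from the source it depends on.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    """One entry in the directory. All field names are illustrative."""
    name: str
    owner: str
    data_sources: tuple = ()


class Directory:
    """A minimal registry that enforces policy at registration time."""

    def __init__(self, approved_sources):
        self._resources = {}
        self._approved = set(approved_sources)

    def register(self, resource):
        if not resource.owner:
            raise ValueError("every resource needs an owner")
        unapproved = set(resource.data_sources) - self._approved
        if unapproved:
            raise ValueError(f"unapproved data sources: {sorted(unapproved)}")
        self._resources[resource.name] = resource

    def lookup(self, name):
        """Return the resource with this name, or None if it isn't registered."""
        return self._resources.get(name)

    def consumers_of(self, source):
        """Names of every registered resource that reads from `source`."""
        return sorted(
            r.name for r in self._resources.values() if source in r.data_sources
        )
```

With something like this in place, “where is that API pulling its data from?” becomes a lookup rather than a detective job.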

While any team using the cloud should be doing this, it’s particularly important for DevOps. A huge part of the ethos behind DevOps involves streamlining processes. Having a good infrastructure is an essential part of doing that. If you’re making cloud-native apps – especially if you’re working in SaaS development – investing in this upfront will save you a lot of time and money in the long run.

Test For Performance

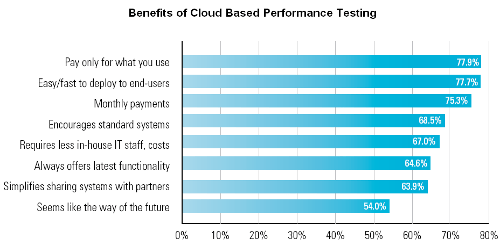

One common mistake when working in cloud deployments is letting performance issues slip by. It can be very easy not to notice them, especially when working on the backend of a program. This can lead to problems being found by customers or clients – likely leading to a bad experience for them. It also puts pressure on your team, as they will have to replicate the issue and come up with a fix on the customer’s timescale, rather than their own. This is unnecessary pressure, and is an issue that can be easily avoided by increasing your testing upfront. However, rather than waste your DevOps team’s talent on repetitive tests, take advantage of automated performance testing.

As well as testing everything before deployment, automated testing allows you to test your applications at regular intervals. This increases the chances that you’ll notice issues before your customers do! It’s particularly useful if you’re regularly rolling out patches, or if the cloud on which the application is hosted has updated recently.
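An automated performance check can start out very small. The sketch below, using only the Python standard library, times an operation several times and compares the median against a budget. The function names and the budget are assumptions for illustration; a scheduler or CI job of your choice would run a check like this at regular intervals.

```python
import statistics
import time


def measure_latency(operation, runs=20):
    """Median wall-clock time of `operation` over `runs` calls, in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def check_budget(operation, budget_seconds, runs=20):
    """Return (within_budget, median_seconds) for the given operation."""
    median = measure_latency(operation, runs)
    return median <= budget_seconds, median
```

Using the median rather than the mean keeps a single slow run from failing the check on its own.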

It’s much easier to run automated testing in a cloud-based system, as you’re not reliant on having sufficient hardware to run it. A huge benefit of using cloud hosts is the ability to scale up and down as needed. This is particularly beneficial on the testing side of things. It allows you to do large-scale and thorough tests before deployment, as well as smaller scale testing on a regular basis. That’s all without worrying about maintaining servers for that specific purpose.

Catching performance problems early has an additional benefit from the business side of things too. If you’re hosting your applications on a public cloud, the provider may try to compensate for poor performance by assigning more resources to you. This can result in a hefty – and wholly unnecessary – bill.

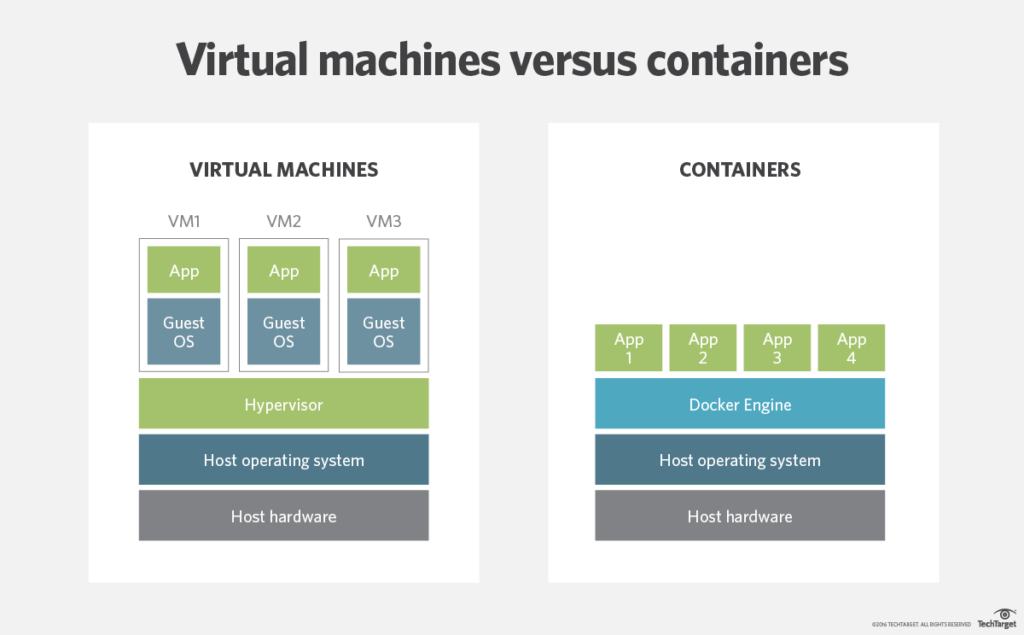

Use Containers

Containers are a particularly useful bit of technology when it comes to cloud computing. They allow you to compartmentalize applications, which means you can work on certain areas without worrying about the impact on others. For instance, you can keep your in-house VoIP phone system in the same place as any extensions you might have for it – and well away from external clients.

You can provide microservices in a cluster of containers, which makes them much more portable. They’re easy to deploy, and easy to update as well. However, it does create the additional challenge of managing multiple versions. This is why investing in infrastructure and testing is important, as it allows you to reap the benefits of containers whilst mitigating any potential issues.
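One simple way to keep on top of multiple versions is to check regularly which image tags are actually running. The Python sketch below assumes you can already export a list of `(service, tag)` pairs from your own tooling; the function name and data shape are hypothetical.

```python
from collections import defaultdict


def find_version_drift(deployments):
    """Return services that are running more than one image tag.

    `deployments` is an iterable of (service_name, image_tag) pairs.
    """
    versions = defaultdict(set)
    for service, tag in deployments:
        versions[service].add(tag)
    return {
        service: sorted(tags)
        for service, tags in versions.items()
        if len(tags) > 1
    }
```

An empty result means every service is on a single version. Anything else is a list of places worth a closer look.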

Of course, not every application is suited to containers. Before moving everything to being container based, it’s worth working out what you’ll gain from doing so. Just like choosing which tools to use, you may want to use a mix of approaches rather than all or nothing.

Commit To Ongoing Training

With both DevOps and the cloud being relatively new, it’s worth ensuring anyone working with them is properly trained. It can be tempting to only train newcomers, but as the fields evolve, you should expect constant changes. Committing to continuous learning means you and your whole team will remain up to date and ready to tackle any problems or events that might arise.

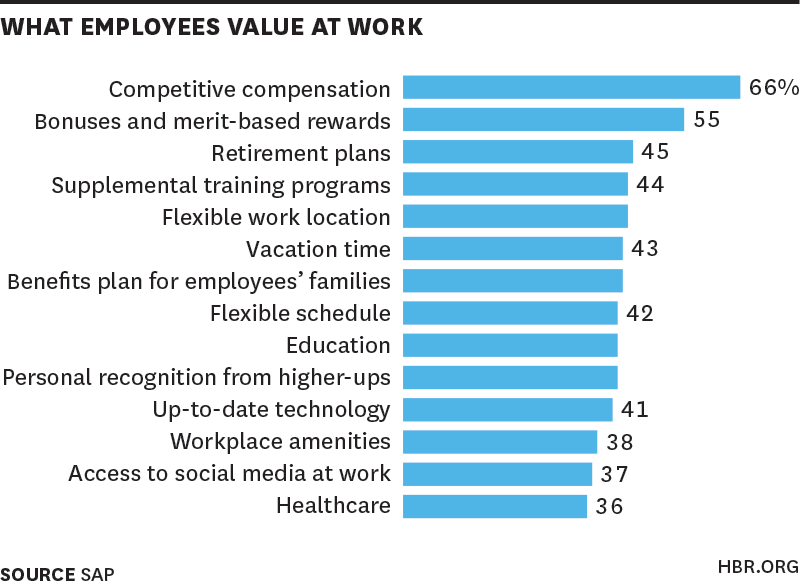

It can be challenging to persuade management that ongoing training is financially worthwhile. It’s worth noting that training is easy to deliver remotely and securely, thanks to the rise of VoIP communication. It may also be worth pointing out that a lot of research into employee satisfaction notes that access to continuous learning plays a huge role.

If you have the opportunity, it’s worth investing in training that goes beyond the immediate scope of any team member’s role. That’s not to say it should be completely outside their field, but ensuring your DevOps team have a basic background in cybersecurity will help keep your applications secure. Equally, ensuring your non-IT teams have an understanding of how the cloud works will make it much easier when they’re either using or selling your applications!

What next?

DevOps and the cloud have huge potential for business savings in the long run, but they do require investment up front. This investment isn’t just monetary – it’s an investment of time too. Many of these best practices can seem time-consuming (especially getting the infrastructure in place before beginning, rather than as and when). However, it’s definitely worth it.

By bearing these best practices in mind, you’ll find that developing, deploying, and maintaining applications is much simpler. Of course, as with any cutting-edge field, these might change. The most important thing is to watch for developments in your field and to implement them where possible. This is especially relevant when it comes to security. Understanding the current state of things, both within your own application and in the wider world, is the best way to ensure you’re not at risk.

It’s also worth keeping an eye on other cloud platforms and making sure the ones you’re using remain the best ones for you. It’s not worth staying with one that doesn’t offer everything you need – working around something is never as effective as being able to work with something.