Not all data scientists have a computer science background – I received my bachelor’s degree in Statistics, for example. As I moved into data, I noticed that I was good at scripting, but was not able to create a ‘production-quality’ code. A fundamental step in writing production-quality code is the ability to log properly. In this article, I will explain how to log in Python.

First, we will explore why logging is a fundamental part of any real-world application. Next, we will look at the main actors in Python’s logging module. Each actor is introduced in both a theoretical and a practical way. We will become familiar with each of these actors by writing simple code examples.

At the end of the article, an example of how to embed the logging library in a structured data science project is shown. All the examples used in the article are available in my GitHub repo. You can clone this and play with the examples.

Why Logging

Logging is the key to enabling diagnostic auditing. With a proper log file, it’s possible to know what has happened in the application. Has the application run smoothly? Has a weird error occurred? Does the model perform as expected?

Let’s make these questions more practical by defining an example that we will carry through this article.

We have just developed an application that makes weather forecasts.

Our application works as follows:

- Feature collections: we collect data about air pressure, wind speed, and the presence of clouds in the surrounding area. Each feature has one dedicated sensor and data is transmitted using its API;

- Weather forecasts: we select a trained model and use it to predict the weather of the day;

The project is composed of four simple Python files:

-

|-> features_ingestion.py

|-> make_prevision.py

|-> utils.py

|-> main.pyLet’s focus on the features ingestion phase. The script that performs features collection is the following:

If we launch the script from the terminal and one of the APIs does not work, we will see the not easily human-readable standard error message on the console.

To make the error more readable we can insert a try-except block with a print statement like the one shown at line 13:

Now we can easily figure out which API is not working… if we read the stdout and the stderr on the console!

Our boss is very happy with our work. Our weather forecast is so accurate that everyone checks it to decide whether or not go for a hike in the countryside! To improve the application, he has decided to make a forecast every hour – 24 times a day.

Geat! So, we rent an AWS instance, set up the environment and the crontab, and leave the office to go home in a great mood. The next day we arrive at the office at 9 am and… our boss is yelling! The application did not work all night – it only started to work again a couple of hours ago! Our boss ‘gently’ asks what happened, and we are unable to answer. The stderr and stdout were lost when the application finished!

To avoid this situation, we have to add logs to our application!

A logger is an object that sends messages outside the application for example, in a text file. By looking at this text file we can find out what happened in our application after the application had finished its run.

As a detective, it is important to know what happened, and both where and when it happened. When we write something in the log file, we must be careful to include all these ‘w’s’. In addition, it is important to provide a priority scale in the log file (a log that notifies us that the application is going to explode should be more visible than a log that indicates that everything is ok).

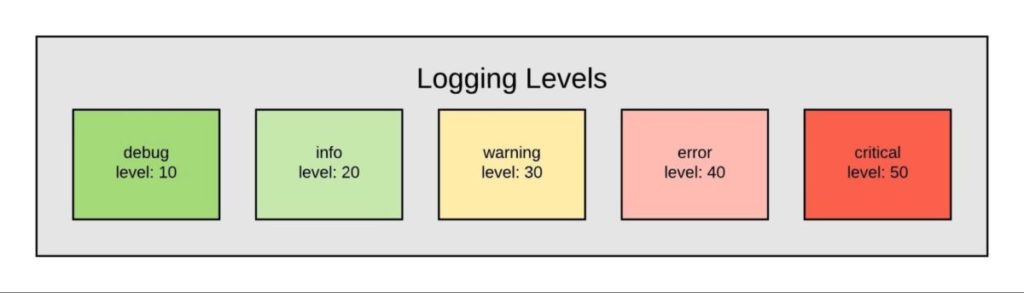

The logging priority scale in Python consists of the following levels:

- DEBUG: the messages written in this level provide detailed insights about the application. It is typically used by developers when they find a bug. Have you ever embedded a print statement to check the status of the variables when you have found a bug? If you log a message in the ‘debug level’ instead, you can avoid having to remove the print statement when your debugging phase is over, and you can reuse the logged message when the next bug appears 😉

- INFO: in this level, the messages are used to confirm that the application is running smoothly. We can regard the messages at this level as being the checkpoints of our program.

- WARNING: the messages written in this level provide insights that indicate something unusual is going on. The program should be able to handle the problem, but the output could be weird.

- ERROR: as suggested by the name, the messages in this level suggest that the application is not working correctly. The output will definitely be affected.

- CRITICAL: the highest level. The application will stop running soon and will no longer produce an output.

Luckily for us, the logging module in Python incorporates these properties (the three w’s and the priority levels) with almost no effort.

Logging module: intro and main actors

The Python logging module (called logging) defines functions and classes to include structured logs in our Python application.

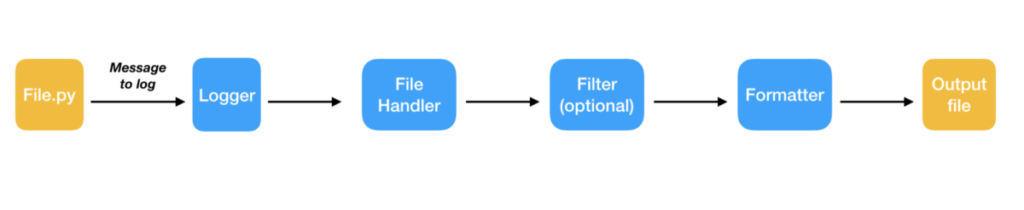

The following diagram illustrates the flow of a Python program that writes a message into a log file.

As we can see there are three main actors:

- LOGGER: this is the main class of the module. We ‘interact’ with the logging system through this class of instances. Using its methods, we can write log messages in the log file.

- HANDLER: this class determines where the log messages will be written.

- FORMATTER: this class is used to decide the format of the log file. With a proper formatter, we can easily add the ‘three w’s’ to our logger.

What follows is a a set of bullet points for each of the three main classes that roughly outlines their main methods and initialization functions. Don’t worry if you don’t understand the functionality of the methods – the examples below will make these clearer.

Logger class methods

To get an instance of the Logger class we have to call the function getLogger(logger_name).

Once we have a Logger instance we can call the main methods:

debug(message): to log a message at the debug levelinfo(message): to log a message at the info levelwarning(message): to log a message at the warning levelcritical(message): to log a message at the error leveladdHandler(handlerObj): to add an handler objectsetLevel(logging_level): to set the level from which the logger starts to log.

For a more practical example, let’s create the first script to embed the logging module:

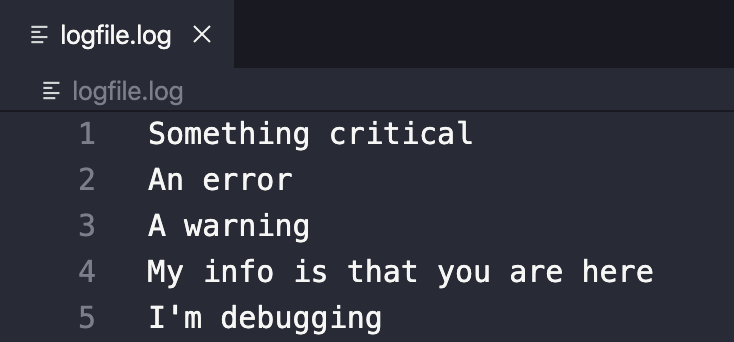

If we run the script, the output on the console will be:

Something critical

An error

A warningOnly messages with a level equal to or higher than ‘warning’ are output! This happens because the default configuration of the logging module is the warning level. To output all the messages, we have to use the setLevel(logging_level) method.

The output on the console is now:

Something critical

An error

A warning

My info is that you are here

I'm debuggingHandler class method

The function to call to instantiate an handler object depends on the output source that we want to use (I know this title is not 100% correct – we have several handlers, but I think it is better to imagine the handler as a ‘unique’ object that sends messages to the desired stream). The most commonly used functions to instantiate an handler object are the following:

StreamHandler(): to instantiate a Handler that sends messages to stdout and stderr.StreamHandler(file_path): to instantiate a Handler that sends messages to a text file. (There are many varieties of FileHandler such asRotatingFileHandlerandTimeRotatingFileHandler)

However, we can instantiate handlers to send emails or to send messages through TCP/IP sockets or HTTP servers!

Check the logging.handlers module documentation for more info!

The main methods of a handler are:

setFormatter(formatter): to determine the formatter of the handler.setLevel(logging_level): to set the level from which the handler starts to log.

NOTE that the level of the handler should be higher than or equal to the level of its logger.

Let’s get our hands dirty by adding a Handler to our previous example.We’ll log in a file named logfile.log.

Now we have an empty console. All the messages are in the following file:

Let’s make things a bit more amusing.

We can log with the same logger both on the console and in the log file. On the console we will log all the levels (we would like to see our whole “print”), but in the file we will log from the warning level, so that we track (and keep) only records regarding the problems that actually occurred.

The code to perform this task is:

The output is presented in this funny gif 😉

With a simple line we are able to log in two different places with two different levels! How cool is that!

Formatter class methods

To get an instance of the Formatter class we have to call the module’s function Formatter(). This class does not have methods. The Formatter constructor function takes as input three optional arguments: a message format string, a date format string, and a style indicator. The core argument is the message format string – let’s focus on that. The message format string defines the structure of all the messages that will be written. The message format string is composed of LogRecord attributes: you can see the list of LogRecord attributes here. The key attributes are:

- levelname: the log level of the input message

- message: the log message itself.

- asctime: time at which the log method is created

- module: the name of the module that contains the message.

- funcName: the name of the function that contains the message.

- args: list of arguments used to call the associated function or method.

With the former list we are able to programmatically write the ‘three w’s’ and the priority scale in our log messages. With the level name we write the priority scale. With the message LogRecord attribute, we answer the ‘what?’ question. With the asctime LogRecord attribute we will define the “when”. With the remaining LogRecord attributes we define the “where”.

Let’s add a formatter to our handlers:

Now the output on the console is:

2020-06-09 21:48:07,825~CRITICAL~Something critical~module:log_hand_log_1

2020-06-09 21:48:07,825~ERROR~An error~module:log_hand_log_1

2020-06-09 21:48:07,825~WARNING~A warning~module:log_hand_log_1

2020-06-09 21:48:07,826~INFO~My info is that you are here~module:log_hand_log_1

2020-06-09 21:48:07,826~DEBUG~I'm debugging~module:log_hand_log_1Code language: JavaScript (javascript)Can you see the power of the logger? We did not change the messages in lines 17–21, but we have decorated them with the timestamp, the level, and the module where the messages were generated. These insights cost nothing!

We can also provide different formatters for different handlers of the same logger. In this way, we can have a ‘cleaner’ output on the console and a ‘detailed’ output in the log file.

From theory and dummy examples to the real word

Now that we have the power of logging at our fingertips we have to use it to make our boss happy. Let’s return to our weather forecast example and embed the logging module in the application.

Impulsively, we will add the logger to the script that is most likely to raise an exception: the ‘famous’ ingestion script. Without thinking too much, we will cut and paste from the code of the tutorial above. The result is the following code:

We instantiated the logger within the lines 5–18 (too many lines!).

We log messages in lines 21, 29, 33 and 38.

Now, if an API does not work, the program will write a record like this in the logfile.log:

2020-06-10 00:20:28,899~CRITICAL~API <class 'utils.AirPressureApi'> did not work~module:ingestion_weather~module:feature_ingestionCode language: HTML, XML (xml)Thus, when we arrive at the office in the morning we can easily see that at twenty minutes past midnight the ingestion script experienced an error caused by the Air Pressure API.

But… what if we want to embed the logging module in our application’s other scripts as well?

Let’s quickly recall the structure of the application:

-

|-> features_ingestion.py

|-> make_prevision.py

|-> utils.py

|-> main.pymain.py will call features_ingestion.py or make_prevision.py

In order to write a in a unique log file for all the logs from the two scripts, we have two options:

- Instantiate a unique logger object in main.py and pass it to the other scripts.

- Instantiate many logger objects with the same handler and the same formatter. Each logger object will therefore send the output of its module to the same location, with the same format.

Python’s convention is to create a logger instance for each module. The name of each logger is the name of the module. In order to keep the settings (handler, levels, formatters) the same, creating a ‘logger factory’ module is recommended, like this:

With the creation of the logger factory module, our application is now structured as follows:

-

|-> features_ingestion.py

|-> make_prevision.py

|-> utils.py

|-> main.py

|-> mylogger.pyNow, the ingestion script will be:

and the make_prevision.py will be:

If an error occurs in the ingestion phase at midnight, and another error occurs in the make_prevision script at one a.m., the next morning we will find the following logfile:

2020-06-10 00:00:00,899~CRITICAL~API <class 'utils.HumidityApi'> did not work~module:ingestion_weather~module:feature_ingestion

2020-06-10 01:00:00,899~CRITICAL~Problem in loading~module:make_previsionCode language: HTML, XML (xml)Conclusion

In this tutorial we have seen why logging is a key element when we have to put our code into production. We have seen the main actors of the Python logging module; we started by creating dummy examples to get our hands dirty, and by the end, the logging module was embedded in a little ‘real-word’ application.

All developers make errors and all programs crash. Highly successful developers have the ability to quickly identify and solve the problem. The logging module is one of the most powerful tools at our disposal to easily identify the source of any problem.