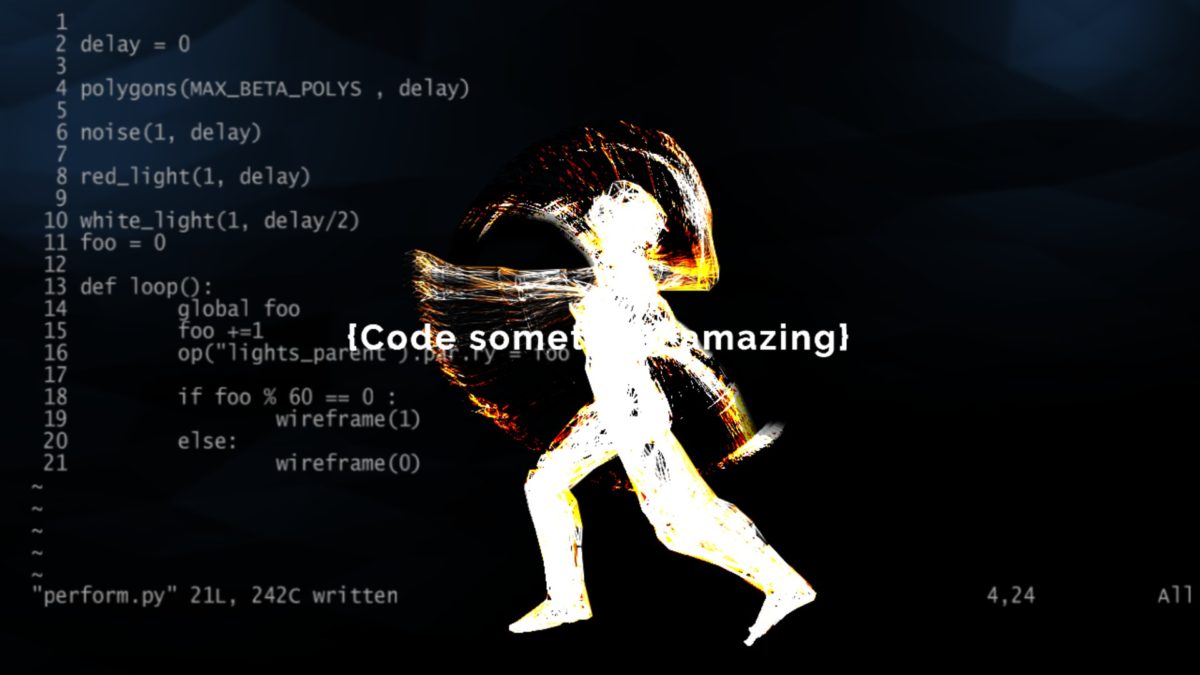

Codemotion Rome 2019’s opening performance combines dance, motion capture, live coded generative visuals and live music performance.

Join us in this article to discover how we used WebSockets, Vim, visual applications such as TouchDesigner or Blender and much more to build the infrastructure behind it.

Codemotion Rome 2019’s opening performance was an entertaining experience, and the process that we, as Chordata New Arts, went through to give birth to it was just as inspiring.

As the artistic branch of the emerging tech project Chordata, we are always confronting the new relationships between technologies, media and artistic languages.

This work is powered by Chordata’s homonymous open-source motion capture framework.

When we discussed our intentions for the opening show with Codemotion co-founder Mara Marzocchi, we were both immediately intrigued by the idea of combining dance, live coded generative visuals and live music. This led to a performance in which the dancer’s movements gave life to a digital body that transformed through time and space.


A microservices-like infrastructure

Many of you might have heard of the emerging trend of microservices. As artists, we only recently came across the concept and noticed, interestingly enough, how our systems and workflows had spontaneously evolved to resemble the patterns and methodologies of that architecture, in which a group of autonomous services each take care of one part of the process in a modular fashion.

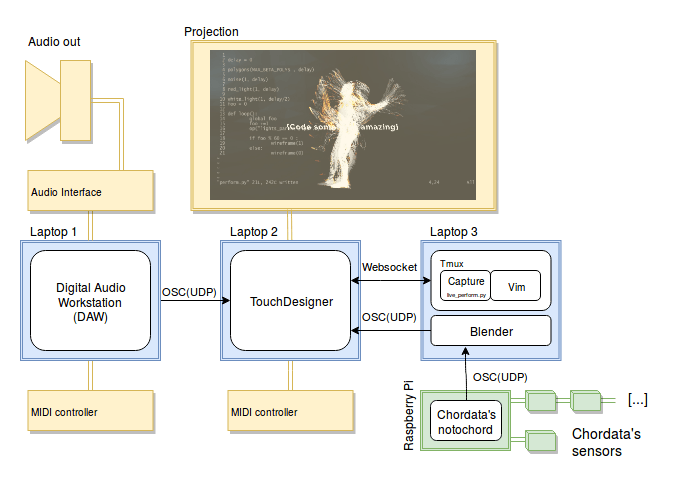

Here’s a representation explaining of how the machines involved in our system cooperate:

Our systems and workflows resemble the patterns and methodologies of a microservices architecture.

Each laptop processes one conceptual and technical part of the overall computation that powers the performance, while exchanging information with the others.

One laptop is being used for real-time audio synthesis, another for real-time video processing and a third one for handling the motion capture data and for the live-coding input. A microcomputer attached to the performer’s body takes care of managing the sensors.

Let’s dig deeper into the details.

Motion capture

The core of the motion capture system is the service running on the Raspberry Pi: the Chordata Notochord. Its job is to read the values coming from the motion sensors, process this data and send it via OSC (a flexible protocol built on top of UDP and very popular among digital artists) to a laptop running Blender.

The open source motion capture framework Chordata, and 3D manipulation software Blender are used to get the live movements of a performer on stage.
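OSC is a lightweight protocol. To show this, here is a minimal sketch of how a message carrying a few float arguments is encoded and sent over UDP, using only the Python standard library. The address `/example/sensor` and any host/port you pass in are hypothetical placeholders. The addresses and payload layout the Notochord actually uses are documented in the Chordata Wiki and are not reproduced here.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded with nulls to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)  # one 'f' type tag per float argument
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return osc_string(address) + osc_string(type_tags) + args


def send_osc(sock: socket.socket, host: str, port: int, address: str, *values: float) -> None:
    """Send one OSC message as a single UDP datagram."""
    sock.sendto(osc_message(address, *values), (host, port))
```

For example, `send_osc(sock, "127.0.0.1", 9000, "/example/sensor", 1.0, 0.0, 0.0, 0.0)` sends four floats from a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` to a hypothetical local port. Each message is a self-contained datagram, so a lost packet is simply skipped. That suits a stream of sensor readings, since each reading is superseded a few milliseconds later.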

The real-time motion data is processed inside Blender through forward kinematics. In practice, the rotations of the individual body segments are chained together from the root of the skeleton outwards, so that together they represent a humanoid figure. The resulting data is then sent to the core visual processor: TouchDesigner, a flow-based, graphically oriented development platform.

Once again, the protocol of choice for this communication is our beloved OSC.
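Forward kinematics means computing where each joint ends up by accumulating rotations from the root of a bone chain outwards. The sketch below is a deliberately simplified planar (2D) version of that idea, using hypothetical bone lengths and angles. Blender's armatures perform the equivalent computation in 3D with full rotations.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def forward_kinematics(lengths: Sequence[float], angles: Sequence[float],
                       origin: Point = (0.0, 0.0)) -> List[Point]:
    """Return the joint positions of a planar bone chain.

    Each angle (in radians) is relative to the parent bone, so rotations
    accumulate as we walk from the root towards the tip of the chain.
    """
    x, y = origin
    heading = 0.0
    joints = [(x, y)]
    for length, angle in zip(lengths, angles):
        heading += angle  # the child inherits the parent's rotation
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        joints.append((x, y))
    return joints
```

Take a hypothetical "upper arm" and "forearm" of length 1 with the elbow bent by 90 degrees. Calling `forward_kinematics([1.0, 1.0], [0.0, math.pi / 2])` places the elbow at (1, 0) and the wrist at approximately (1, 1).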

Real-time visuals

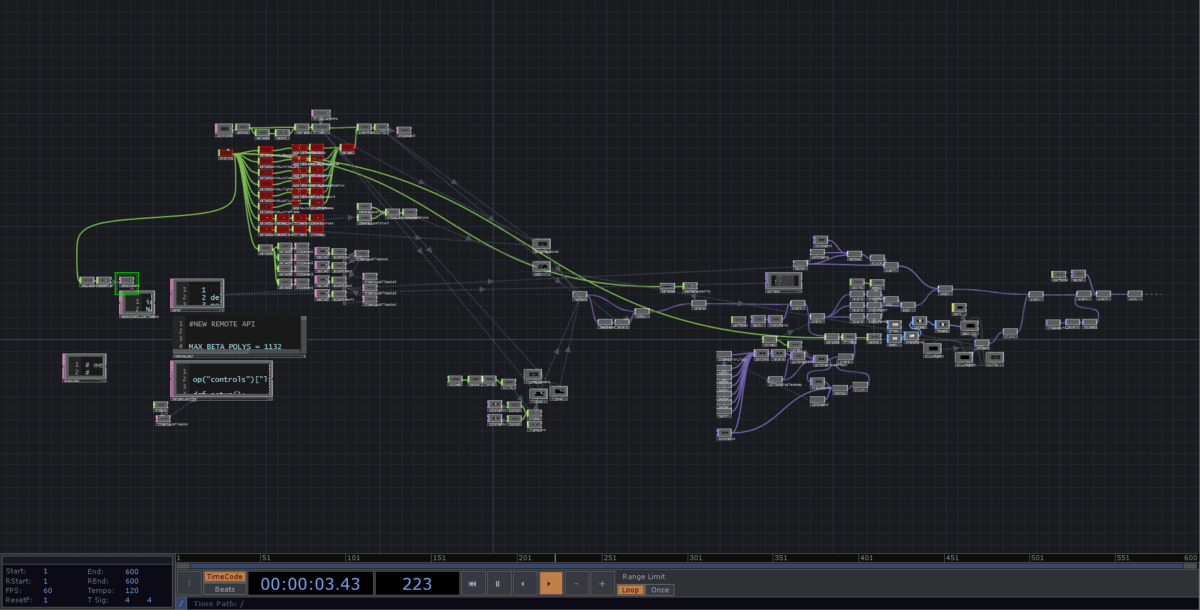

The backbone of the visual processing is built by assembling a network of nodes inside TouchDesigner.

One of the first tasks performed is receiving, interpreting and visualizing motion capture data. The incoming movements are applied to a 3D humanoid model. Further controlled deformations are implemented to give us the ability to move towards more abstract dimensions.

Several 3D and 2D image manipulation processes are implemented as a network of nodes inside TouchDesigner.

The nodes in the middle of the flow are responsible for placing the model in a 3D world with virtual lights and a camera. The 3D scene is then rendered into a two-dimensional texture, which is fed into further nodes that perform 2D texture manipulations. In the screenshot above, all the nodes on the right side of the network are violet: in TouchDesigner, this color identifies nodes that work with two-dimensional textures.
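At its core, flattening the 3D scene into a texture is a perspective projection. TouchDesigner's renderer does this on the GPU. The sketch below only illustrates the textbook pinhole-camera math for a single point. It assumes a camera at the origin looking down the negative Z axis and a hypothetical vertical field of view.

```python
import math
from typing import Tuple


def project_point(point: Tuple[float, float, float], fov_y_deg: float,
                  width: int, height: int) -> Tuple[float, float]:
    """Project a camera-space point to pixel coordinates with a pinhole camera."""
    x, y, z = point
    if z >= 0:
        raise ValueError("point is not in front of the camera")
    # Focal length in pixels, derived from the vertical field of view.
    f = (height / 2) / math.tan(math.radians(fov_y_deg) / 2)
    u = width / 2 + f * x / -z   # divide by depth: farther points move toward the center
    v = height / 2 - f * y / -z  # image rows grow downward, hence the minus sign
    return u, v
```

For example, a point straight ahead of the camera lands in the center of a 1920×1080 texture, whatever its distance.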

Finally, the resulting image is composited with the code that is being written and streamed live.

Audio reactive visual effects

You might have noticed, in the diagram shown at the beginning of the article, that our Digital Audio Workstation communicates with the machine running TouchDesigner through OSC messages. These messages carry numerical values produced by a real-time envelope follower applied to the audio being generated. This allows us to achieve audio-reactive effects, using the sound intensity as a parameter for our video effects.
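The article doesn't detail how the envelope follower inside the DAW is implemented. As an illustration only, here is a common one-pole attack/release design in plain Python, with hypothetical attack and release times. A real-time version would run the same recurrence on each incoming sample and forward, for example, the latest value as an OSC float.

```python
import math
from typing import Iterable, List


def envelope_follower(samples: Iterable[float], sample_rate: float,
                      attack_ms: float = 10.0, release_ms: float = 200.0) -> List[float]:
    """One-pole peak envelope follower.

    The envelope moves towards |sample| quickly while the signal is rising
    (attack) and slowly while it is falling (release).
    """
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    out = []
    for sample in samples:
        level = abs(sample)
        coeff = attack if level > env else release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out
```

The short attack lets visuals jump on a hit, while the longer release keeps them from flickering between beats.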

Live coding

Although TouchDesigner is a flow-based programming environment, one of its wonders is that it is completely scriptable with Python.

The pink nodes on the left of the flow form a WebSocket server that listens for messages containing Python code to be executed. This lets us inject arbitrary Python code that controls the operators in the TouchDesigner flow, which is what makes the live-coding approach possible.
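The core of such a live-coding endpoint can be sketched independently of the transport. It compiles and executes the received text, and turns any exception into a message that can be sent back to the performer. The function name `run_snippet` is hypothetical. The TouchDesigner WebSocket callback that would invoke it is left out, since its exact signature is not covered here. Inside TouchDesigner, the namespace passed in would be one from which operators are reachable, for example through the global `op()` function.

```python
import traceback
from typing import Any, Dict


def run_snippet(source: str, namespace: Dict[str, Any]) -> str:
    """Execute a received code snippet in `namespace`.

    Returns "ok" on success, otherwise the formatted traceback, so the
    caller can send the error text back over the same connection.
    """
    try:
        code = compile(source, "<live-code>", "exec")  # syntax errors surface here
        exec(code, namespace)                          # runtime errors surface here
    except Exception:
        return traceback.format_exc()
    return "ok"
```

Reusing one namespace across calls means variables defined in one snippet remain available to the next, which matches how a live-coding session builds up state. Executing code received over the network is only reasonable on a trusted, isolated network such as a performance rig.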

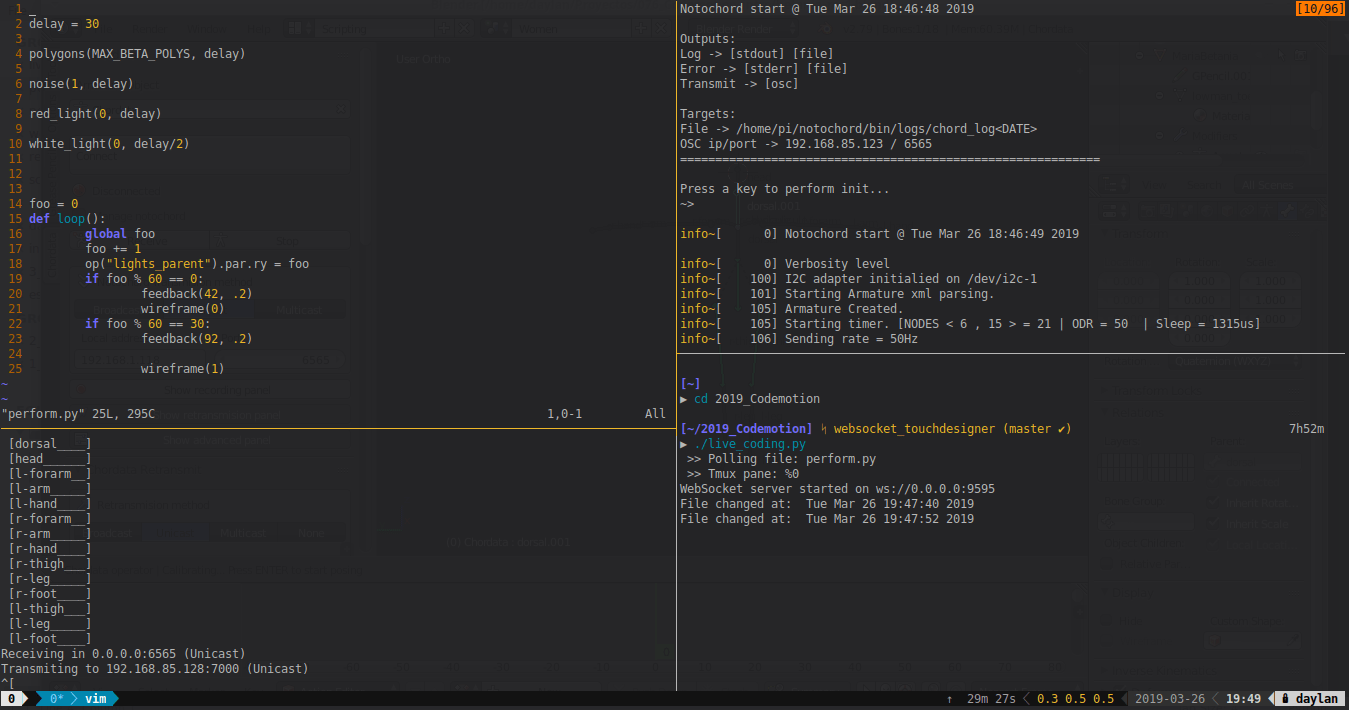

WebSocket communication with TMUX and Vim

If you come from a Unix-like operating system, you might already be familiar with TMUX (Terminal Multiplexer). Apart from being a great productivity tool, TMUX is what allowed us to display the code on the screen. Under the hood, it stores the content of every pane in an internal buffer, and the contents of that buffer can be retrieved through its command-line interface. We used this to write a simple Python program that sends the content of a particular TMUX pane on every keystroke.

Code is captured from a terminal emulator session and streamed to the laptop in charge of the graphical processing.

On the captured pane, we can issue shell commands, or use a console text editor like Vim to edit the code to be sent.
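The capture side can be sketched with `tmux capture-pane -p -t <target>`, which prints the visible contents of a pane to standard output. The original tool sent the pane on every keystroke. The sketch below swaps in a simpler approach: it polls the pane and forwards the contents only when they change. The pane target, the `send` callback (which would write to the WebSocket connection) and the polling interval are all hypothetical.

```python
import subprocess
import time
from typing import Callable, List, Optional


def capture_cmd(target: str) -> List[str]:
    """Build the tmux command that prints a pane's visible contents to stdout."""
    return ["tmux", "capture-pane", "-p", "-t", target]


def capture_pane(target: str) -> str:
    """Run tmux and return the current text of the target pane."""
    result = subprocess.run(capture_cmd(target), capture_output=True, text=True, check=True)
    return result.stdout


def stream_pane(target: str, send: Callable[[str], None], interval: float = 0.05,
                capture: Callable[[str], str] = capture_pane,
                max_polls: Optional[int] = None) -> None:
    """Poll a pane and call `send` whenever its contents change.

    `max_polls=None` polls forever; a number is handy for testing.
    """
    last: Optional[str] = None
    polls = 0
    while max_polls is None or polls < max_polls:
        text = capture(target)
        if text != last:  # skip identical snapshots to avoid redundant traffic
            send(text)
            last = text
        polls += 1
        time.sleep(interval)
```

The `capture` parameter exists so the loop can be exercised without a running tmux server.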

However, where there is code, there can be errors, especially when that code is being written live to control a dynamic process. To retrieve the error messages from the TouchDesigner runtime, full-duplex communication was vital. That requirement made adopting WebSocket for this task (instead of UDP-based OSC) a no-brainer.
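Here is the contrast with OSC in concrete terms. WebSocket messages travel as frames over a single long-lived TCP connection, so the code going one way and the error text coming back share the same channel. Below is a minimal sketch of RFC 6455 framing for a single, unfragmented text frame, plus a decoder for frames coming back. In practice a WebSocket library handles all of this, including the opening HTTP handshake, control frames and fragmentation. Treat the sketch as an illustration only.

```python
import os
import struct
from typing import Optional, Tuple


def encode_text_frame(text: str, mask_key: Optional[bytes] = None) -> bytes:
    """Encode a client-to-server text frame (clients must mask their payloads)."""
    payload = text.encode("utf-8")
    key = os.urandom(4) if mask_key is None else mask_key
    if len(key) != 4:
        raise ValueError("mask key must be 4 bytes")
    header = bytearray([0x81])  # FIN=1, opcode=0x1 (text)
    n = len(payload)
    if n < 126:
        header.append(0x80 | n)  # MASK bit + 7-bit length
    elif n < 65536:
        header.append(0x80 | 126)
        header += struct.pack(">H", n)  # 16-bit extended length
    else:
        header.append(0x80 | 127)
        header += struct.pack(">Q", n)  # 64-bit extended length
    masked = bytes(b ^ key[i % 4] for i, b in enumerate(payload))
    return bytes(header) + key + masked


def decode_frame(data: bytes) -> Tuple[int, bytes]:
    """Decode one complete, unfragmented frame; returns (opcode, payload)."""
    opcode = data[0] & 0x0F
    masked = bool(data[1] & 0x80)
    length = data[1] & 0x7F
    offset = 2
    if length == 126:
        length = struct.unpack(">H", data[2:4])[0]
        offset = 4
    elif length == 127:
        length = struct.unpack(">Q", data[2:10])[0]
        offset = 10
    if masked:
        key = data[offset:offset + 4]
        offset += 4
        body = data[offset:offset + length]
        return opcode, bytes(b ^ key[i % 4] for i, b in enumerate(body))
    return opcode, data[offset:offset + length]  # server-to-client frames are unmasked
```

With the mask key `37 fa 21 3d`, encoding `"Hello"` reproduces the masked example frame given in RFC 6455.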

Is that all there is?

We tried our best to give you an insightful, while not overwhelming, overview of our work and we deeply hope you can take something from it and put it in your own creations.

Obviously, there would be much more to say about each of the topics we briefly discussed. That’s why we gathered some resources for you to learn more about these subjects:

- Chordata Wiki

- TouchDesigner Wiki

- Blender Python API Docs

- Introduction to Open Sound Control (OSC)

- Introduction to TMUX

Feel free to contact us if you’re interested in knowing more about our creative processes, techniques or literally whatever you can come up with. We are always open to sharing our knowledge and experiences with the world 🙂

You can find the sources used for the performance on our repository.