Deep learning promises to deliver a true revolution in how we tackle complex problems. To most people, though, it seems like some arcane dark science. Yet it is one of the key technologies that enabled voice assistants, such as Alexa, to become so proficient.

One of the biggest challenges for voice assistants is learning different accents and languages.

In the run-up to Codemotion’s first online conference on deep learning, I explain how deep learning helps Amazon Alexa to understand us and how we, as developers, can help her do better. We will look at deep reinforcement learning, phonetic pronunciations, and Alexa’s Speech Synthesis Markup Language (SSML).

What is deep learning?

Deep learning allows computers to beat humans at Go. It has been used to identify cancers in mammograms. It can even create its own language. Deep learning is a form of machine learning. In deep learning, you use deep neural networks to identify patterns in data and to make connections between these.

Deep learning was proposed roughly 15 years ago. But it only really became mainstream when we began to develop sufficiently powerful computers capable of running the deep neural networks.

How does Alexa use deep learning?

Believe it or not, Alexa has now been around for over 5 years, arriving with the first Echo speaker in autumn 2014. Since then, she has developed enormously. Many of Alexa’s skills are powered by deep learning. Some of the changes have been subtle, like allowing her to identify where she needs help from a human to improve her speech models.

Other changes are more significant, especially for developers. For instance, Alexa uses transfer learning to make life easier for developers. This allows them to access complex domain knowledge via a set of skill blueprints. “Essentially, with deep learning, we’re able to model a large number of domains and transfer that learning to a new domain or skill,” said Rohit Prasad, vice president and chief scientist of Amazon Alexa, in a 2018 interview.

Self-learning

One really important way Alexa leverages deep learning is for self-learning. When you ask it to play a song, but can’t remember the exact name, it will tell you “sorry, I can’t find that”. When you repeat the correct name, it will learn what you meant. Each time, it will get better.
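The idea of learning from a failed request followed by a successful rephrase can be illustrated with a toy example. This is only a sketch of the general principle, not Amazon's implementation: the `RephraseMemory` class, its `min_count` threshold, and the sample utterances are all hypothetical, and a production system would rely on learned models rather than a lookup table.

```python
from collections import Counter, defaultdict


class RephraseMemory:
    """Toy store of 'failed request -> successful rephrase' pairs (hypothetical)."""

    def __init__(self, min_count=2):
        self.min_count = min_count            # evidence needed before rewriting
        self.rewrites = defaultdict(Counter)  # failed utterance -> rephrase counts
        self.last_failed = None

    def observe(self, utterance, succeeded):
        """Record one turn and whether the assistant could fulfil it."""
        if succeeded and self.last_failed is not None:
            # A success straight after a failure is treated as the correction.
            self.rewrites[self.last_failed][utterance] += 1
        self.last_failed = None if succeeded else utterance

    def rewrite(self, utterance):
        """Return the learned rephrase, or the utterance unchanged."""
        candidates = self.rewrites.get(utterance)
        if not candidates:
            return utterance
        best, count = candidates.most_common(1)[0]
        return best if count >= self.min_count else utterance


memory = RephraseMemory(min_count=2)
for _ in range(2):
    memory.observe("play sky full of stars", succeeded=False)
    memory.observe("play a sky full of stars", succeeded=True)

print(memory.rewrite("play sky full of stars"))  # play a sky full of stars
```

Requiring the same correction to be seen more than once is a simple guard against learning from a single unrelated follow-up request.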

Let Alexa learn accents

One of the amazing things is how good Amazon’s voice assistant is at understanding different accents. We have all seen videos of people with strong accents struggling with voice recognition. Yet Alexa can understand five distinct versions of English (Australian, British, Canadian, Indian and US). Even more impressively, it can cope with multiple regional accents.

So, how is it that voice recognition has come so far in such a short time?

Reinforcement learning

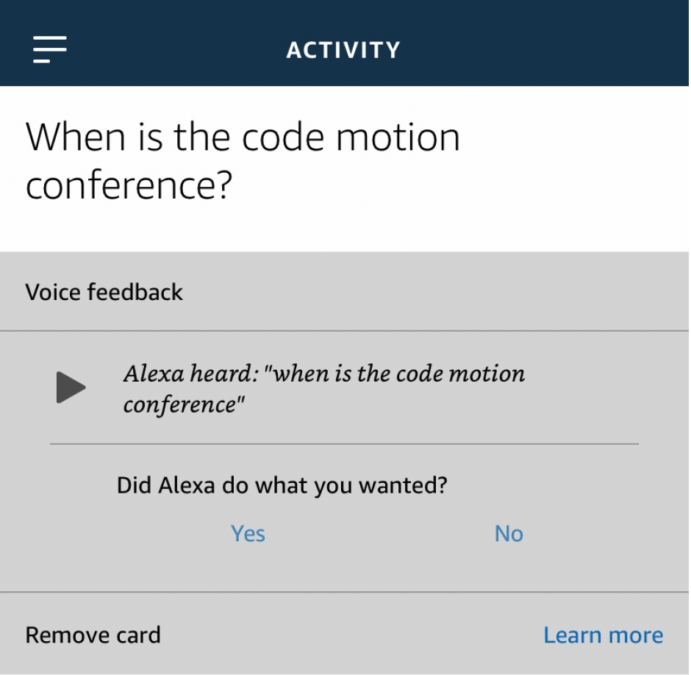

The earliest releases of the Alexa app allowed you to correct anything it got wrong. This was pure supervised learning, where each correction added to the available training data. But nowadays, all the app asks is “Did Alexa do what you wanted?”

This is because Amazon now uses reinforcement learning to teach Alexa. Reinforcement learning works by letting Alexa know whether she made a mistake. But it doesn’t explicitly teach her what the correct outcome is.
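To make the difference from supervised learning concrete, here is a minimal sketch of learning from yes/no feedback alone. It uses an epsilon-greedy strategy, a standard textbook technique, and is not a description of how Alexa is actually trained. The `FeedbackLearner` class and the three candidate actions are made up for illustration.

```python
import random


class FeedbackLearner:
    """Epsilon-greedy learner driven only by yes/no feedback (illustrative)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.actions}
        self.values = {a: 0.0 for a in self.actions}  # running mean reward

    def choose(self):
        """Mostly pick the best-rated action, occasionally try another."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore
        return max(self.actions, key=lambda a: self.values[a])  # exploit

    def feedback(self, action, did_what_user_wanted):
        """Update the estimate for `action` from a single yes/no answer."""
        reward = 1.0 if did_what_user_wanted else 0.0
        self.counts[action] += 1
        # Incremental mean: nudge the estimate towards the new reward.
        self.values[action] += (reward - self.values[action]) / self.counts[action]


learner = FeedbackLearner(
    ["play_song", "play_album", "play_station"], epsilon=0.2, seed=1
)
intended = "play_album"  # known to the user, never shown to the learner

for _ in range(200):
    action = learner.choose()
    # The only signal is "did Alexa do what you wanted?", not the right answer.
    learner.feedback(action, action == intended)

best = max(learner.values, key=learner.values.get)
print(best)
```

The learner is never told that `play_album` was the right answer. It only finds out whether each attempt was accepted, which is why it has to explore occasionally.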

Teaching Alexa to pronounce things

Amazon’s voice assistant is pretty good at pronunciation, but she is far from perfect. This is especially true when it comes to the names of skills (Alexa’s equivalent of an app). She usually relies on the phonetic rules she has learned. In some languages, these are really easy to apply. But in others, like English, they can be really hard.

For instance, did you know that the letter combination ‘ough’ has more than ten distinct pronunciations, including thought, though, bough, enough, cough and through? This is hard enough, but brand names and app names often have their own pronunciations. Fortunately, Amazon offers developers ways to control how Alexa pronounces words.

Speech synthesis markup language

The main approach for teaching pronunciation is SSML, the Speech Synthesis Markup Language. This is a powerful language that allows you to control how Alexa speaks. It allows you to add pauses, inflections, and other speech effects. SSML is an XML-based markup language in which you describe exactly what you want to happen.

<speak>

I <emphasis level="strong">really</emphasis> want to learn SSML.

</speak>

Alexa’s SSML allows you to change a huge range of things. These include:

- <amazon:domain> which changes the style of speech (e.g. conversational, news, etc.)
- <amazon:effect> which applies speech effects such as whispering
- <amazon:emotion> which tells Alexa to sound excited or disappointed
- <prosody> which controls the rate, pitch and volume of what Alexa says

Many other tags are available; they are described in Amazon’s SSML reference.
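If you generate SSML from code rather than writing it by hand, it helps to escape the text and attribute values so the markup stays well-formed. The following is a small sketch using only the Python standard library. The `tag` and `speak` helpers are hypothetical names, not part of any Alexa SDK, and the attribute values shown are assumed to be accepted for the voice and locale in use.

```python
from xml.sax.saxutils import escape, quoteattr


def tag(element, text, /, **attrs):
    """Wrap already-escaped SSML `text` in <element ...>...</element>."""
    attr_str = "".join(f" {key}={quoteattr(value)}" for key, value in attrs.items())
    return f"<{element}{attr_str}>{text}</{element}>"


def speak(*parts):
    """Join SSML fragments with spaces and wrap them in <speak>."""
    return tag("speak", " ".join(parts))


# Rebuilds the emphasis example from above.
ssml = speak(
    escape("I"),
    tag("emphasis", escape("really"), level="strong"),
    escape("want to learn SSML."),
)
print(ssml)
# <speak>I <emphasis level="strong">really</emphasis> want to learn SSML.</speak>

# escape() turns "&" into "&amp;" so the result is still valid XML.
quiet = tag("prosody", escape("Slow & quiet."), rate="slow", volume="soft")
excited = tag("amazon:emotion", escape("We won!"), name="excited", intensity="medium")
print(speak(quiet, excited))
```

Escaping matters most when the spoken text comes from user input or a database, where a stray `&` or `<` would otherwise break the document.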

Using phonemes

So, how do you control Alexa’s pronunciation of a word? The answer is the SSML <phoneme> tag. This tag uses the International Phonetic Alphabet (IPA) to specify how a word should sound. You may have seen IPA spellings of words on Wikipedia. They look like a weird mix of letters from different alphabets. For example, Berlin (/bɜːrˈlɪn/; German: [bɛʁˈliːn]).

Many common names have different pronunciations in different countries. Take my full name Tobias. In English, the middle syllable is pronounced ‘buy’, but in German, it’s ‘bee’. So, I could explain the two pronunciations like this:

<speak>

This is how <phoneme alphabet="ipa" ph="təˈbaɪəs">Tobias</phoneme> is pronounced in English. In German, it is pronounced <phoneme alphabet="ipa" ph="toˈbiːas">Tobias</phoneme>.

</speak>

Of course, with deep learning, it won’t be long before computers can learn how names should be pronounced using the same contextual clues we use.
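Until then, a skill has to supply the pronunciation itself. As a rough sketch, the snippet below wraps a word in a <phoneme> tag and places the result in the JSON a custom skill returns, where `outputSpeech` has the type `SSML`. The helper names `phoneme` and `ssml_response` are made up for this example, the response is reduced to a bare minimum, and the IPA strings are the ones from the Tobias example with stray whitespace removed.

```python
import json
from xml.sax.saxutils import escape, quoteattr

# IPA strings from the Tobias example (whitespace stripped).
PRONUNCIATIONS = {"en": "təˈbaɪəs", "de": "toˈbiːas"}


def phoneme(word, ipa):
    """Wrap `word` in an SSML <phoneme> tag carrying its IPA pronunciation."""
    return f'<phoneme alphabet="ipa" ph={quoteattr(ipa)}>{escape(word)}</phoneme>'


def ssml_response(ssml_body):
    """Build a minimal skill response whose speech is given as SSML."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {
                "type": "SSML",
                "ssml": f"<speak>{ssml_body}</speak>",
            },
            "shouldEndSession": True,
        },
    }


body = f"In German, my name is pronounced {phoneme('Tobias', PRONUNCIATIONS['de'])}."
# ensure_ascii=False keeps the IPA characters readable in the printed JSON.
print(json.dumps(ssml_response(body), ensure_ascii=False, indent=2))
```

Keeping the pronunciations in one dictionary makes it easy to pick the right variant per locale instead of scattering IPA strings through the skill’s code.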

Want to learn more?

If you’re interested in how AIs are getting better at natural human-like conversation, you should check out the Codemotion Deep Learning Conference, in particular Mandy Mantha’s talk on Building Scalable state-of-the-art Conversational AI.