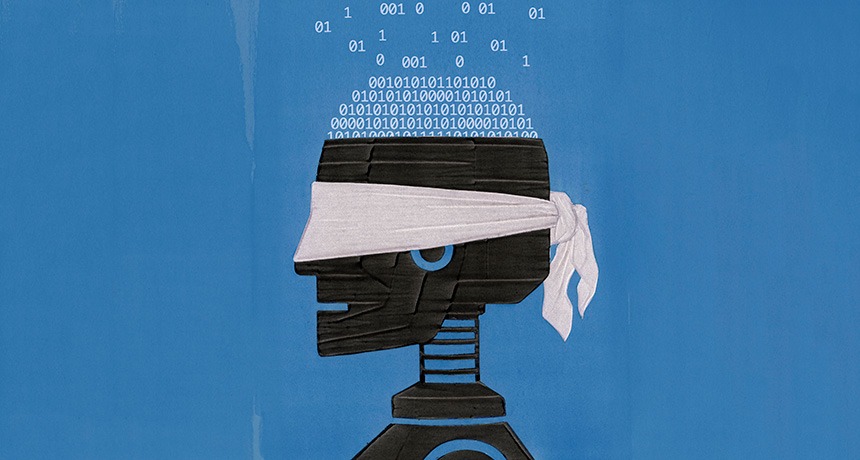

Debates about fairness across different disciplines and technologies are much older than machine learning and AI. So how can fairness be applied to good effect in this relatively new field?

In the video below, Lee Boonstra, Developer Advocate at Google, shares insights and lessons learned over the years at the company. By analyzing different products and research processes, Boonstra explains how fairness in machine learning can help make information more accessible to everyone worldwide.

More about Fairness and ML

If you want to learn more about this topic, we recommend the following links:

What does “fairness” mean in machine learning systems? Berkeley

A Tutorial on Fairness and Machine Learning

Fairness ML Crash Course by Google Developers

Identifying bias is an essential part of fairness in ML. ML systems often reproduce human bias, so it’s key for teams to adopt best practices and strategies that tackle this issue. The links above include tips based on real experiences and case studies.
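As a rough illustration of what identifying bias can look like in practice, the sketch below compares how often a model gives a positive prediction to each group. It is a minimal example and is not taken from the video or the links above: the function names, the toy predictions, and the group labels `"a"` and `"b"` are all hypothetical. The gap it reports is often called the demographic parity difference, which is only one of several possible fairness measures.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = positive prediction, 0 = negative prediction.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates)
print(gap)
```

A large gap does not prove that a system is unfair, but it is a signal that a team may want to look more closely at the data and the model.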

More about Lee Boonstra

Lee Boonstra has been working at Google since 2017. She is a conversational AI expert and has helped many businesses develop AI-powered chatbots and voice assistants at enterprise scale.

These businesses are building intelligent AI platforms on the Google Cloud Platform using Dialogflow for intent detection and natural language processing, speech recognition technology, and contact center technology. She is also the author of the Apress book The Definitive Guide to Conversational AI.