Building and scaling AI for the financial markets is complex and requires a specific approach, mindset and software architecture. When investment decisions are at stake, ensuring AI is understandable, transparent, and above all, reliable is of paramount importance. The key to achieving these goals lies in the design of a modular architecture that allows software engineers, financial analysts and developers to work simultaneously and at different levels of complexity.

Introduction

The financial industry has been disrupted by the sheer amount of information now available. In particular, the introduction of Machine Learning (ML) has given investment analysis and modelling a massive push. This growth builds on a long tradition of financial institutions (e.g. banks, asset managers) and investment firms (e.g. investment advisors) using quantitative techniques to cross-reference data and find the best investment opportunities. The opportunity presented by Machine Learning models is to improve investment stability, flexibility, and resilience to unexpected shocks, leading to better risk-adjusted performance. However, many institutions have lagged behind in adopting ML, or could not afford to handle its growing complexity. It has become clear that the critical ingredient for successfully deploying ML models for investment is having the best software engineers, financial data scientists and developers work together to design and maintain the infrastructure that runs behind the scenes, so that new investment ideas can be prototyped and tested quickly and then deployed. Financial AI is not a “two guys and a laptop” scenario.

The unique challenges of building AI for investment decision-making

After many trials and a lot of research, MDOTM ended up building what is referred to internally as the MDOTM Processing Unit (MPU), a production-ready machine for AI-driven investment modelling and analysis. This solution streamlines and automates many R&D routines (e.g., data quality protocols, strategy configuration, hyperparameter exploration) while maintaining the high standards of security control and reliability that clients require. Indeed, the MDOTM solution directly tackles three significant obstacles that make building financial AI a unique endeavour: reliability, replicability, and scalability of the R&D process.

Reliability

In the context of investment strategies, reliability indicates how frequently such strategies deliver on the investment objectives (e.g. a target risk-return profile) once applied in the real world. Indeed, one common risk in model development is that in-sample estimates differ substantially from out-of-sample ones. Often, this is the result of biased data, unstable/overfitted models, or excessive reliance on backtests (i.e. historical simulations) to validate investment ideas.
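The gap between in-sample and out-of-sample estimates can be made concrete with a small calculation. The sketch below is only an illustration under stated assumptions: the class `SampleGapSketch`, its method names, and the toy return series are hypothetical and do not come from MDOTM. It computes a crude risk-adjusted score (mean return divided by sample standard deviation) on the window used to build a strategy and on the window that follows it.

```java
// Illustrative sketch only: the class, the method names and the return series
// are hypothetical and do not come from MDOTM.
final class SampleGapSketch {

    // Arithmetic mean of values[from, to).
    static double mean(double[] values, int from, int to) {
        double sum = 0.0;
        for (int i = from; i < to; i++) {
            sum += values[i];
        }
        return sum / (to - from);
    }

    // Sample standard deviation of values[from, to) (divides by n - 1).
    static double stdDev(double[] values, int from, int to) {
        double m = mean(values, from, to);
        double sumSq = 0.0;
        for (int i = from; i < to; i++) {
            sumSq += (values[i] - m) * (values[i] - m);
        }
        return Math.sqrt(sumSq / (to - from - 1));
    }

    // Mean return per unit of volatility: a crude risk-adjusted score.
    static double riskAdjusted(double[] values, int from, int to) {
        return mean(values, from, to) / stdDev(values, from, to);
    }

    public static void main(String[] args) {
        // Toy periodic returns in percent; the first four form the in-sample window.
        double[] returns = {1.0, 3.0, 2.0, 2.0, 0.0, 1.0, -1.0, 2.0};
        int split = 4;
        double inSample = riskAdjusted(returns, 0, split);
        double outOfSample = riskAdjusted(returns, split, returns.length);
        System.out.println("in-sample score:     " + inSample);
        System.out.println("out-of-sample score: " + outOfSample);
    }
}
```

With these toy numbers the in-sample score is roughly 2.45 and the out-of-sample score roughly 0.39. A drop of that size is the kind of warning sign that suggests a strategy was fitted to its backtest rather than to a persistent pattern.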

Replicability

High-quality research must be transparent, replicable and, most importantly, understandable. Although the portfolio and investment management literature is vast, it is often influenced by a very strong confirmation bias, opaque datasets, or, most frequently, anecdotal evidence. This attitude can be particularly risky when developing ML models to solve investment-specific problems, because it limits the understandability and overall reliability of the process.

Scalability

Until recently, working with a growing number of clients simultaneously created an additional architectural challenge and a fundamental tradeoff between customisation and scalability. On the one hand, each new business can require many (perhaps hundreds of) client-specific customisations. On the other hand, a centralised way to monitor, maintain, and deploy becomes necessary when the number of clients grows faster than the team of data scientists and developers involved in the process.

A step back: why investments need the scientific method and AI

MDOTM was founded in 2015 with the goal of bringing the scientific method into the investment process. The company has subsequently become the European leader in developing AI-driven investment strategies for institutional investors. Over the years, thanks to an approach rooted at the intersection of Finance and Physics, MDOTM has attracted many talented data scientists, software engineers, financial data analysts, and developers. As its tech team likes to say, “we are a tech company that develops AI-driven technology for investment decision-making”. The company believes that “investing has become a tech challenge”. As the internet democratises access to information, the key is having the right technology to sift through data and extract meaningful investment signals from the background noise of financial markets. MDOTM has also developed a proprietary technology called ALICE™ (Adaptive Learning In Complex Environments) to build investment portfolios using Deep Learning techniques, such as Deep Neural Networks, that continuously analyse millions of pieces of market data. This mix of expertise has resulted in explosive growth. The company is now scaling operations internationally, looking for brilliant new software engineers to join the team.

The problem: Traditional architectures are not enough

As MDOTM grew in size and complexity, one of the biggest obstacles was designing a scalable software architecture that would allow the many people working in R&D to work seamlessly and efficiently across prototyping, engineering, and production. Two options were initially considered, namely a monolithic architecture, and an architecture built around microservices.

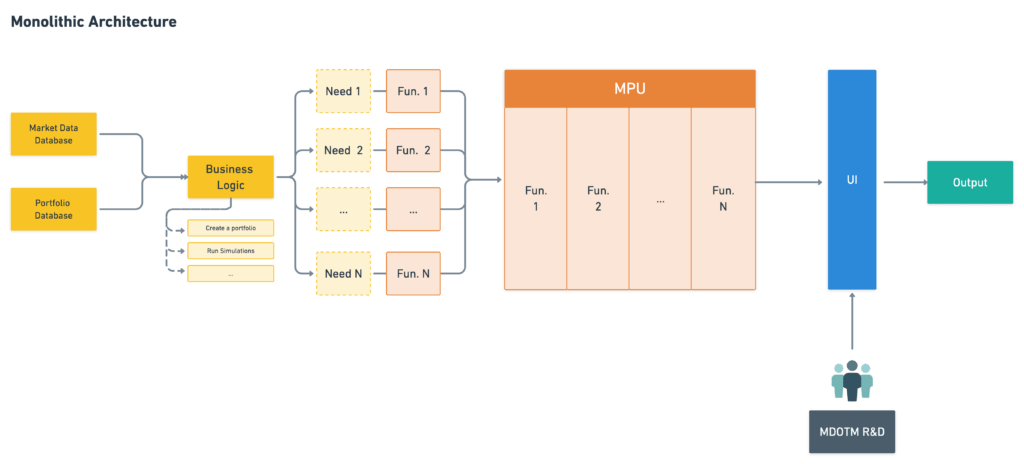

Option 1: Monolithic architecture

The monolithic approach has a long tradition in software engineering and is still used in many fields today. The term refers to an application in which a single program encapsulates all the different activities, such as data retrieval, data processing and UI. A monolithic application is therefore designed without treating modularity as a priority. Although this design comes with several positives (high performance and ease of deployment, for example), one of its most significant drawbacks is that it becomes hard to maintain when requirements are not known beforehand or need to be added at a later stage of the development process, which is why the company eventually decided not to follow this path. The following diagram represents how MDOTM’s technology would have looked with a monolithic architecture.

Figure 1: How the MPU would have looked with a monolithic architecture.

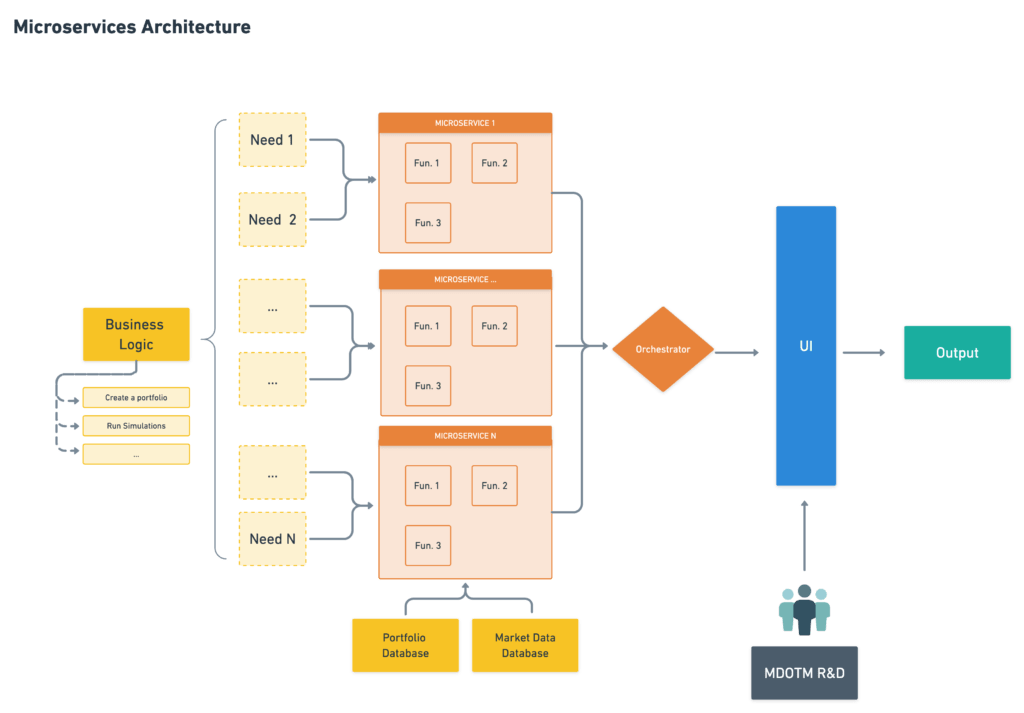

Option 2: Microservices architecture

Another approach that the company considered was a microservices-based architecture. This design overcomes many of the limits of the monolithic approach: the software is split into separate parts, each running a specific service, which can be handled independently instead of working sequentially inside a single program. Apart from easier code maintenance, the most significant advantage of this approach lies in the technical and functional quality of the overall architecture. However, as the following diagram shows, this architecture did not match what the company was looking for. Running a microservices-based architecture would still have required the business logic to be known beforehand, and it would still have been difficult for MDOTM’s non-programmers (such as financial data analysts) to interact with the research architecture.

Figure 2: How the MPU would have looked with a microservices-based architecture.

The solution: the MDOTM Processing Unit (MPU)

After discarding several options, the Engineering team introduced an additional level of abstraction to all processes, exploiting the flexibility of nested Application Contexts to instantiate generic applications. The resulting architecture is a Java Spring Boot application that runs generic Application Contexts according to the configuration provided with each call. From a logical standpoint, the MPU is the core application for its verticals. From a practical perspective, the MPU exposes REST calls that are used in conjunction with Spring-compliant XML configurations and a key-value parametrisation that updates Spring MVC applications. The Spring Web MVC framework separates the different aspects of the application (input logic, business logic, and UI logic), providing a Model-View-Controller (MVC) architecture that facilitates the development of flexible and loosely coupled web applications. By design, child applications share their lifecycle with the originating REST call. The innovative element of this architecture is that the core application is entirely agnostic of the functioning and scope of its child applications when they are launched. This level of abstraction gives the MPU the ability to execute programs defined by the caller, instead of a predefined set of services.
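The idea of a core that runs caller-defined programs can be sketched without Spring at all. The following is a minimal plain-Java stand-in, not the MPU's actual code: `MpuSketch`, `Core`, `ChildContext`, `Component`, `ComponentFactory` and the component names `scale` and `shift` are all hypothetical. A list of component names plays the role of the XML configuration, a string map plays the role of the key-value parametrisation, and one method call plays the role of one REST call.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java stand-in for the idea, NOT Spring code: every name here is hypothetical.
final class MpuSketch {

    // A unit of work inside a child application.
    interface Component { double apply(double input); }

    // Builds a component from the key-value parameters sent with the request.
    interface ComponentFactory { Component create(Map<String, String> params); }

    // Stand-in for a child Application Context; it lives for one request only.
    static final class ChildContext implements AutoCloseable {
        private final List<Component> steps = new ArrayList<>();
        private final Runnable onClose;

        ChildContext(Runnable onClose) { this.onClose = onClose; }

        void add(Component step) { steps.add(step); }

        double run(double input) {
            double value = input;
            for (Component step : steps) {
                value = step.apply(value);
            }
            return value;
        }

        @Override
        public void close() {
            steps.clear();
            onClose.run();
        }
    }

    // The core knows how to assemble and run a context, not what it computes.
    static final class Core {
        private final Map<String, ComponentFactory> factories = new HashMap<>();
        private int openContexts = 0;

        void registerFactory(String name, ComponentFactory factory) {
            factories.put(name, factory);
        }

        int openContexts() { return openContexts; }

        // Stands in for one REST call: 'configuration' lists the components to wire.
        double handleRequest(List<String> configuration, Map<String, String> params, double input) {
            openContexts++;
            try (ChildContext context = new ChildContext(() -> openContexts--)) {
                for (String name : configuration) {
                    ComponentFactory factory = factories.get(name);
                    if (factory == null) {
                        throw new IllegalArgumentException("Unknown component: " + name);
                    }
                    context.add(factory.create(params));
                }
                return context.run(input);
            } // the child context is closed here, together with the request
        }
    }

    public static void main(String[] args) {
        Core core = new Core();
        core.registerFactory("scale", p -> x -> x * Double.parseDouble(p.getOrDefault("factor", "1")));
        core.registerFactory("shift", p -> x -> x + Double.parseDouble(p.getOrDefault("offset", "0")));

        double result = core.handleRequest(
                List.of("scale", "shift"), Map.of("factor", "2", "offset", "1"), 10.0);
        System.out.println(result + " / open contexts: " + core.openContexts());
        // prints "21.0 / open contexts: 0"
    }
}
```

In this sketch the caller, not the core, decides which components run and in what order, and the try-with-resources block ties the child context's lifetime to the call that created it. In a real Spring Boot application the equivalent wiring would presumably rely on parent and child application contexts rather than on hand-written classes like these.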

The benefits of a modular architecture

In addition to the requirements mentioned above, the modular architecture selected offers several major improvements on the architectures previously considered. A modular architecture:

- Has all the pros of a monolithic architecture (i.e., high performance, ease of deployment) without sacrificing code maintenance or language accessibility, while offering a high degree of flexibility;

- Allows non-developers, or non-Java users, to rewrite applications by recombining the different components of the main application;

- Introduces a new standard of ‘Component-as-a-Service’, meaning that developers are encouraged to think in terms of, and design, stateless components;

- Avoids memory leaks and errors caused by components being left in an unpredictable state;

- Is easy to debug: exceptions are easy to spot, and the stack trace contains all the active components.
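The points about stateless components and recombination can be illustrated with a short sketch. It is an assumption-laden example rather than MDOTM's implementation: the class `StatelessComponentsSketch`, the component names `demean` and `clip`, and the `run` helper are hypothetical. Each component is a pure function of its input, so a "recipe" (an ordered list of names) is all that is needed to assemble a new application from existing parts.

```java
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;
import java.util.stream.Collectors;

// Illustrative only: component names and behaviour are hypothetical.
final class StatelessComponentsSketch {

    // Subtracts the mean from every value; keeps no state between calls.
    static List<Double> demean(List<Double> values) {
        double mean = values.stream().mapToDouble(Double::doubleValue).average().orElse(0.0);
        return values.stream().map(v -> v - mean).collect(Collectors.toList());
    }

    // Limits every value to the range [-1, 1]; also stateless.
    static List<Double> clip(List<Double> values) {
        return values.stream()
                .map(v -> Math.max(-1.0, Math.min(1.0, v)))
                .collect(Collectors.toList());
    }

    // Registry of available components, looked up by name.
    static final Map<String, UnaryOperator<List<Double>>> COMPONENTS = Map.of(
            "demean", StatelessComponentsSketch::demean,
            "clip", StatelessComponentsSketch::clip);

    // Applies the named components in order; the input list is never modified.
    static List<Double> run(List<String> recipe, List<Double> input) {
        List<Double> current = List.copyOf(input);
        for (String name : recipe) {
            UnaryOperator<List<Double>> component = COMPONENTS.get(name);
            if (component == null) {
                throw new IllegalArgumentException("Unknown component: " + name);
            }
            current = component.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        List<Double> data = List.of(1.0, 2.0, 6.0);
        System.out.println(run(List.of("demean", "clip"), data)); // prints [-1.0, -1.0, 1.0]
        System.out.println(run(List.of("clip", "demean"), data)); // prints [0.0, 0.0, 0.0]
    }
}
```

Because no component holds state, running the same recipe on the same input always gives the same result, and reordering the recipe is enough to obtain a different application. That is the property that lets someone who does not write Java assemble new behaviour from a configuration alone.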

Conclusion

If the best Data Scientists and Software Engineers around choose to play in the world of investments, it is because investing is a constant ‘tech’ challenge that can be solved with skills, curiosity, and a creative mindset. Is your game, in Java and Python, good enough to give it a try?

Prove yourself and join the MDOTM Software Engineer Coding Challenge powered by Codemotion: race through three challenges for a chance to win 500€ in Amazon vouchers!