Article written by Enrico Picari, Dario Balestri, and Codemotion.

Introduction

In December 2022, the Accenture Google Business Group (AGBG) AI team worked on a project with a Document Understanding component. To tackle this component, they tried applying a Large Language Model (LLM) from a paper they had read shortly before. This article shares some background on the topic and describes the approaches the team applied and their results. The Accenture team saw strong potential for LLMs here, since the documents they had to deal with differed widely from case to case, both in formatting and in the wording of their contents.

They were particularly interested in testing the performance of these models in the low- or even no-data regime, through in-context learning. The team was also curious whether fine-tuning such models would achieve satisfactory accuracy, comparable to that of older, more classical NER algorithms.

Finally, they wanted to try deploying an LLM on Google Cloud as a REST API service, without having to rely on proprietary APIs.

Doc understanding pipeline

Generally speaking, Document Understanding refers to the ability to comprehend the content and meaning of a document or a set of documents. This involves various processes such as classifying input data, identifying key concepts, recognizing relationships between different contents and finally extracting the desired information. In the last decades, the development of Machine Learning (ML) and Natural Language Processing (NLP) techniques enabled computers to learn from large amounts of data and make predictions about the content of a document, allowing us to tackle several tasks such as named entity recognition (NER), sentiment analysis, topic modeling, and document classification.

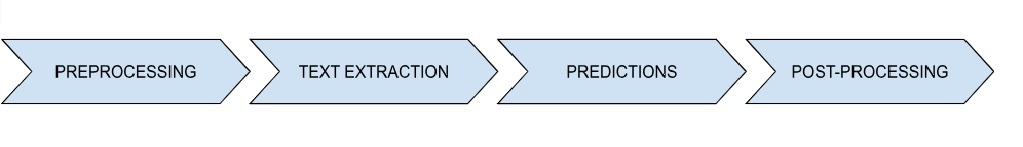

Document Understanding pipelines are usually complex and involve many different steps, but at a high level they can be split into the following stages:

- Preprocessing: depending on the task to be performed, documents must be put in a form such that they can be fed into the pipeline.

- Text and annotations extraction: if the documents at hand are not plain or formatted text, their textual content, together with word-level annotations, must be retrieved, usually via optical character recognition (OCR).

- Prediction: this is the pipeline’s core stage, in which the data are fed into AI/ML algorithms to produce the results needed to analyze the documents.

- Post-processing: the raw results of the previous step are usually put in some standardized form to improve their quality and relevance.
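As a rough illustration of how the four stages above fit together, here is a minimal Python sketch. Everything in it is hypothetical: the `Document` structure, the stage functions and the `answer_fn` callback are illustrative names, and text extraction is a placeholder that simply decodes UTF-8 bytes instead of calling a real OCR engine.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """A document flowing through the pipeline (hypothetical structure)."""
    raw: bytes
    text: str = ""
    annotations: list = field(default_factory=list)  # e.g. word-level OCR output
    predictions: dict = field(default_factory=dict)


def preprocess(doc: Document) -> Document:
    # Placeholder: e.g. normalize encodings, split pages, deskew scanned images.
    return doc


def extract_text(doc: Document) -> Document:
    # Placeholder for an OCR call: here we only decode bytes as UTF-8 text.
    doc.text = doc.raw.decode("utf-8")
    doc.annotations = [{"word": w} for w in doc.text.split()]
    return doc


def predict(doc: Document, questions: dict, answer_fn) -> Document:
    # Core stage: ask the model one question per entity to extract.
    doc.predictions = {name: answer_fn(doc.text, q) for name, q in questions.items()}
    return doc


def postprocess(doc: Document) -> dict:
    # Standardize raw outputs: trim whitespace and drop empty answers.
    return {k: v.strip() for k, v in doc.predictions.items() if v and v.strip()}


def run_pipeline(raw: bytes, questions: dict, answer_fn) -> dict:
    doc = preprocess(Document(raw=raw))
    doc = extract_text(doc)
    doc = predict(doc, questions, answer_fn)
    return postprocess(doc)
```

Keeping each stage a separate function makes it easy to swap the prediction step (zero-shot, few-shot or fine-tuned model) without touching the rest.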

In this blog post, we focus on the prediction step and, in particular, explain how our approach is based on Large Language Models (LLMs). These are ML models based on the transformer architecture, which have gained popularity in recent years thanks to their performance on a wide variety of tasks, including Natural Language Processing (NLP) tasks.

The IT5 Model

T5 stands for “Text-to-Text Transfer Transformer,” which is a transformer-based neural network architecture published by Google AI in 2019. It is a powerful language model that achieved state-of-the-art results on a wide range of natural language processing (NLP) tasks, such as text classification, question-answering, machine translation, and summarization. It is trained in a “text-to-text” setting, meaning that it can be fine-tuned on a variety of NLP tasks by simply converting the input and output into a text-to-text format.
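To make the text-to-text idea concrete, the sketch below casts a few tasks into input/target string pairs, using task prefixes of the kind shown in the T5 paper (e.g. `translate English to German:`). The helper `to_text_to_text` is a hypothetical illustration, not part of any library; the point is that both the input and the target, even a classification label, are plain strings.

```python
def to_text_to_text(task: str, text: str) -> str:
    """Cast one task instance into a single T5-style input string."""
    prefixes = {
        "translation": "translate English to German: ",
        "summarization": "summarize: ",
        "acceptability": "cola sentence: ",  # grammatical acceptability (CoLA)
    }
    if task not in prefixes:
        raise ValueError(f"unknown task: {task}")
    return prefixes[task] + text


# Targets are plain strings too, even for a classification task such as CoLA.
examples = [
    (to_text_to_text("translation", "That is good."), "Das ist gut."),
    (to_text_to_text("acceptability", "The course is jumping well."), "not acceptable"),
]
```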

T5 was trained on a large and diverse dataset called C4, which stands for “Colossal Clean Crawled Corpus.” C4 is a web-scale dataset built from Common Crawl web-scrape data; it contains about 750GB of uncompressed English text, drawn from roughly 365 million web pages. The text in C4 has been preprocessed and cleaned to remove duplicates, low-quality pages, and other noise.

While some multilingual variants of the T5 model have been introduced, their performance was found to be suboptimal for languages other than English compared to ad-hoc monolingual variants; this is why G. Sarti and M. Nissim introduced the IT5 model in their 2022 paper. IT5 is a version of T5 pre-trained exclusively on Italian text, namely a large, cleaned corpus of Italian web text, and then fine-tuned on several downstream tasks in Italian.

Like many other LLMs, the IT5 model comes in different sizes, depending on the number of model weights (parameters):

| Model | # weights |
|---|---|
| it5-small | 60M |
| it5-base | 220M |
| it5-large | 738M |

The AGBG AI team framed the entity-extraction problem as a question answering (QA) task, in which the desired output is obtained by feeding the model the input together with a question. In our case we used IT5-question-answering (the base model is available on the Hugging Face Hub), a version of IT5 fine-tuned to perform QA as an open generative task; this means that the model prompt must contain the input and a question, and the answer is generated in a sequence-to-sequence fashion (other QA task types exist as well, e.g. extractive QA, where the answer is a span copied from the input text).

Practically, the prompts follow this format (Domanda is Italian for Question):

```text
<text>. Domanda: <question>?
```

This is the format used in the aforementioned paper by Sarti and Nissim.
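A minimal Python sketch of zero-shot inference with this prompt format, assuming the Hugging Face `transformers` library (with PyTorch) is installed. The checkpoint name `gsarti/it5-base-question-answering` is our assumption for the base QA model mentioned above; `build_qa_prompt`, `load_model` and `answer` are hypothetical helper names, and the generation length is an arbitrary choice.

```python
from functools import lru_cache


def build_qa_prompt(text: str, question: str) -> str:
    """Build a zero-shot prompt in the '<text>. Domanda: <question>?' format."""
    # Strip trailing '.' / '?' so the template does not double the punctuation.
    return f"{text.strip().rstrip('.')}. Domanda: {question.strip().rstrip('?')}?"


@lru_cache(maxsize=None)
def load_model(model_name: str = "gsarti/it5-base-question-answering"):
    """Load tokenizer and seq2seq model once per process (downloads on first use)."""
    # Lazy import: build_qa_prompt stays usable without transformers/torch installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    return tokenizer, model


def answer(prompt: str, model_name: str = "gsarti/it5-base-question-answering",
           max_new_tokens: int = 32) -> str:
    """Generate a free-text answer for one prompt."""
    tokenizer, model = load_model(model_name)
    inputs = tokenizer(prompt, return_tensors="pt", truncation=True)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `answer(build_qa_prompt(document_text, "Qual è la data della fattura"))` would return the generated answer as a string. `lru_cache` keeps the tokenizer and model in memory, so the weights are downloaded and loaded only once per process.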

Generally speaking, there are several ways to extract information from text using LLMs. In our experiments, we tried Zero-Shot, Few-Shot and Fine-Tuning approaches, as detailed in the next sections.

Zero-Shot Learning

Traditionally, Zero-Shot Classification refers to training a classifier on a set of labels and then evaluating it on other labels it has never seen at training time. In recent years, with the rise of LLMs, the term Zero-Shot Learning has come to mean, more broadly, getting a model to perform a task without any specific training on it. For example, a language model trained on a general language modeling task (such as predicting the next word in a sentence) may be able to perform other tasks such as text classification or named entity recognition. Zero-Shot Learning is particularly useful when limited labeled data is available, or when the cost of obtaining labeled data is prohibitively high. By leveraging the zero-shot capabilities of LLMs, it is possible to perform these tasks without extensive task-specific training data.

When experimenting with a given LLM, it is natural to first look at the model's performance on the data at hand by making predictions with a Zero-Shot approach, and this is precisely what we did with IT5 on our set of test documents. For reference, these are the accuracy results for the extraction of three entities:

| Model | entity_1 | entity_2 | entity_3 |
|---|---|---|---|
| it5-qa-small | 72% | 12% | 47% |
| it5-qa-base | 60% | 46% | 53% |
| it5-qa-large | 86% | 39% | 54% |
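The article reports accuracy without specifying how a prediction is judged correct. One common choice, assumed here, is exact match after light normalization (lowercasing, trimming whitespace and trailing punctuation). The sketch below computes that per entity; the data layout (one dict per document mapping entity names to answers) is hypothetical.

```python
import string


def normalize(answer: str) -> str:
    """Lowercase and strip surrounding whitespace and trailing punctuation."""
    return answer.strip().lower().rstrip(string.punctuation).strip()


def entity_accuracy(predictions: list, references: list) -> dict:
    """Per-entity exact-match accuracy.

    predictions/references: one dict per document, entity name -> answer string.
    A missing prediction counts as wrong.
    """
    correct, total = {}, {}
    for pred, ref in zip(predictions, references):
        for entity, gold in ref.items():
            total[entity] = total.get(entity, 0) + 1
            if normalize(pred.get(entity, "")) == normalize(gold):
                correct[entity] = correct.get(entity, 0) + 1
    return {e: correct.get(e, 0) / total[e] for e in total}
```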

Few-shot Learning

A natural extension of Zero-Shot Learning is the so-called Few-Shot Learning, or In-Context Learning. Here too no actual learning is involved, since the model’s weights are not updated; unlike Zero-Shot, however, in Few-Shot Learning the model is shown some examples of input-output pairs at the beginning of the prompt, before the actual input. This gives the model some context from which to figure out the expected output. The structure of this context may differ greatly depending on the task, and optimizing it for the problem at hand falls within the realm of prompt engineering.

Within the setting of QA, a typical Few-Shot prompt may be of the following type:

```text
<sample_document_text>. <question_1>: <expected_answer_1>
<sample_document_text>. <question_2>: <expected_answer_2>
…
<sample_document_text>. <question_n>: <expected_answer_n>
<true_document_text>. <question>:
```

where `<sample_document_text>` stands for an example document text, i.e. a prototype of an actual document.
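A small Python helper that assembles a prompt following the template above (one line per example, then the target document with an open question). The function name and the tuple layout of `examples` are hypothetical. Note that the prompt grows with every example, and each example repeats a full document text, so with long documents the prompt can quickly become very long.

```python
def build_few_shot_prompt(examples: list, document_text: str, question: str) -> str:
    """examples: list of (sample_text, question, expected_answer) tuples."""
    # One solved example per line: "<sample_text>. <question>: <answer>"
    lines = [f"{text}. {q}: {a}" for text, q, a in examples]
    # The real document comes last, with the answer left for the model to generate.
    lines.append(f"{document_text}. {question}:")
    return "\n".join(lines)
```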

While Few-Shot Learning can be a very powerful tool and has sometimes been shown to give results comparable to fine-tuning approaches (see, for example, this paper), in our specific use case IT5 performed very poorly in the few-shot scenario.

Fine-tuning

In machine learning, fine-tuning is a transfer learning technique in which a pre-trained model is further trained on new data, and its weights, or part of them, are updated accordingly.

LLMs, also known as foundation models, are pre-trained on large amounts of data at scale in a self-supervised fashion, on generic tasks such as predicting the next word in a sentence (GPT-like models) or reconstructing masked words or spans of text (BERT or T5). It’s precisely these generic pre-training tasks that allow zero- and few-shot learning behaviors to emerge.
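For intuition on the T5 pre-training objective mentioned above, here is a simplified sketch of span corruption: masked spans in the input are replaced by sentinel tokens (`<extra_id_0>`, `<extra_id_1>`, and so on, as used by T5 tokenizers), and the target lists each sentinel followed by the tokens it hides. Unlike real T5 pre-training, which samples spans at random over SentencePiece tokens, this version takes the spans explicitly and works on whitespace-split words.

```python
def span_corrupt(tokens: list, spans: list) -> tuple:
    """T5-style span corruption on a token list.

    spans: sorted, non-overlapping (start, end) index pairs to mask (end exclusive).
    Returns (input_tokens, target_tokens).
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the unmasked prefix
        inputs.append(sentinel)              # replace the whole span with one sentinel
        targets.append(sentinel)             # target: sentinel, then the hidden tokens
        targets.extend(tokens[start:end])
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel closes the target
    return inputs, targets


tokens = "Thank you for inviting me to your party last week.".split()
inp, tgt = span_corrupt(tokens, [(2, 4), (8, 9)])
print(" ".join(inp))  # Thank you <extra_id_0> me to your party <extra_id_1> week.
print(" ".join(tgt))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```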

Besides in-context learning, which performs inference without any training on new data (i.e. without updating the model’s parameters, or weights), another approach is to fine-tune LLMs on downstream tasks using a new corpus of training data.
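Fine-tuning in the QA setting requires turning annotated documents into (prompt, answer) training pairs. The sketch below shows one way to do this, reusing the `<text>. Domanda: <question>?` format; the entity-to-question mapping `QUESTIONS` and the document layout are hypothetical, since the article does not describe the team's actual training data format.

```python
# Hypothetical mapping from entity names to Italian question templates.
QUESTIONS = {
    "entity_1": "Qual è il numero della fattura",
    "entity_2": "Qual è la data di emissione",
}


def build_qa_prompt(text: str, question: str) -> str:
    """Same '<text>. Domanda: <question>?' format used for zero-shot inference."""
    return f"{text.strip().rstrip('.')}. Domanda: {question.strip().rstrip('?')}?"


def make_training_pairs(documents: list, questions: dict) -> list:
    """documents: dicts with 'text' and 'labels' (entity name -> gold answer)."""
    pairs = []
    for doc in documents:
        for entity, gold in doc["labels"].items():
            if entity in questions:  # skip entities we have no question for
                pairs.append({
                    "input_text": build_qa_prompt(doc["text"], questions[entity]),
                    "target_text": gold,
                })
    return pairs
```

The resulting pairs could then be tokenized (for instance with a Hugging Face tokenizer and its `text_target` argument) and passed to a standard sequence-to-sequence training loop such as `transformers`' `Seq2SeqTrainer`; hyperparameters would depend on the model size and dataset.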

In this specific use case, unlike the few-shot approach, fine-tuning the IT5 model on our dataset led to very satisfactory results: compared with the zero-shot results above, accuracy improved for every model size and entity considered, by between 8 and 67 percentage points. The results were competitive with classical NER approaches.

| Model | entity_1 | entity_2 | entity_3 |
|---|---|---|---|
| it5-qa-small | 93% | 79% | 78% |
| it5-qa-base | 92% | 80% | 79% |
| it5-qa-large | 94% | 82% | 82% |
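To quantify the difference between the zero-shot and fine-tuned tables, the snippet below recomputes the per-cell gains from the reported numbers, both in percentage points and relative to the zero-shot accuracy. The values are copied from the two tables; nothing else is assumed.

```python
ZERO_SHOT = {
    "it5-qa-small": {"entity_1": 72, "entity_2": 12, "entity_3": 47},
    "it5-qa-base": {"entity_1": 60, "entity_2": 46, "entity_3": 53},
    "it5-qa-large": {"entity_1": 86, "entity_2": 39, "entity_3": 54},
}
FINE_TUNED = {
    "it5-qa-small": {"entity_1": 93, "entity_2": 79, "entity_3": 78},
    "it5-qa-base": {"entity_1": 92, "entity_2": 80, "entity_3": 79},
    "it5-qa-large": {"entity_1": 94, "entity_2": 82, "entity_3": 82},
}


def gains(before: dict, after: dict) -> tuple:
    """Per model/entity gains: absolute (percentage points) and relative (%)."""
    absolute = {m: {e: after[m][e] - before[m][e] for e in before[m]} for m in before}
    relative = {
        m: {e: round(100 * (after[m][e] - before[m][e]) / before[m][e], 1) for e in before[m]}
        for m in before
    }
    return absolute, relative


absolute, relative = gains(ZERO_SHOT, FINE_TUNED)
```

With these numbers, the absolute gains range from 8 points (it5-qa-large, entity_1) to 67 points (it5-qa-small, entity_2).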

Deploying your own LLM on the Google Cloud Platform

Back in December 2022, Google had not yet launched Generative AI Studio on Google Cloud Platform, so we could not try its powerful text foundation model, PaLM 2. Proprietary models of this kind can be huge (the original PaLM had 540 billion parameters) and reach impressive accuracy even in zero- and few-shot scenarios. Nonetheless, even today one might prefer to bring their own LLM to Google Cloud, be it to reuse something developed elsewhere (e.g. with other cloud providers, or on premises) or to save on inference costs.

Deploying a Machine Learning (ML) model means making it available as a service that accepts incoming requests containing new input data and returns the corresponding outputs.

To do that, the model is typically shipped, exposed as a set of REST API endpoints, to an infrastructure that can serve HTTP requests.
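As an illustration of serving a model behind a REST endpoint, here is a minimal sketch using only Python's standard library. The `/predict` route, the JSON fields `text` and `question`, and `answer_fn` (a stand-in returning a fixed string instead of running the fine-tuned model) are hypothetical. The server reads its port from the `PORT` environment variable, which is how Cloud Run tells a container where to listen, defaulting to 8080.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def answer_fn(text: str, question: str) -> str:
    # Hypothetical stand-in for the fine-tuned model's inference call.
    return "42"


def handle_predict(payload) -> dict:
    """Validate a parsed JSON body and build the JSON-serializable response."""
    if not isinstance(payload, dict):
        raise ValueError("request body must be a JSON object")
    text, question = payload.get("text"), payload.get("question")
    if not isinstance(text, str) or not isinstance(question, str):
        raise ValueError("'text' and 'question' must be strings")
    return {"answer": answer_fn(text, question)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            body, status = handle_predict(payload), 200
        except ValueError as exc:  # also covers json.JSONDecodeError
            body, status = {"error": str(exc)}, 400
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def run_server():
    # Cloud Run injects the listening port through the PORT environment variable.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Calling `run_server()` starts the service; a client would then POST a JSON body with `text` and `question` fields to `/predict`. In production, a framework such as FastAPI or Flask behind a proper application server would usually replace `http.server`, which the Python documentation does not recommend for production use.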

The AGBG AI team needed to run the fine-tuned IT5 model in a GCP project, in order to integrate it with all the other components of the Document Understanding pipeline architecture. There are several feasible ways to do so; focusing in particular on serverless approaches, we considered two strategies:

- Vertex AI: since the model is not a plain TensorFlow model, as it makes use of several classes and abstractions provided by Hugging Face libraries, it must be containerized using Docker, uploaded to the Vertex AI Model Registry and deployed to a custom Vertex AI Endpoint.

- Cloud Run: the model is containerized using Docker, pushed to Container/Artifact Registry and deployed as a Cloud Run service.

Both options allow a high degree of customization, with Vertex AI only imposing the format of requests to and responses from its endpoints. However, since the machines behind Vertex AI endpoints cannot scale to zero when idle, the first solution may lead to higher costs in case of sparse traffic. For this reason, after analyzing the costs based on the expected traffic of our document stream, we decided to go fully custom with Cloud Run.
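The cost argument can be made concrete with a back-of-the-envelope model. The sketch below is deliberately simplified and all rates are hypothetical placeholders, not actual Google Cloud prices: an endpoint that keeps at least one replica running is billed for every hour of the month, while a scale-to-zero service is billed (roughly) only for the hours it is actually busy.

```python
HOURS_PER_MONTH = 730  # average hours in a month: 24 * 365 / 12


def always_on_cost(rate_per_hour: float, min_replicas: int = 1) -> float:
    """Monthly cost of an endpoint that keeps `min_replicas` machines up 24/7."""
    return rate_per_hour * min_replicas * HOURS_PER_MONTH


def scale_to_zero_cost(rate_per_hour: float, busy_hours: float) -> float:
    """Monthly cost of a service billed only while instances are running.

    Simplified: ignores request fees, billing granularity and cold-start time.
    """
    return rate_per_hour * busy_hours


def break_even_busy_hours(always_on_rate: float, on_demand_rate: float,
                          min_replicas: int = 1) -> float:
    """Busy hours per month above which scale-to-zero stops being cheaper."""
    return always_on_cost(always_on_rate, min_replicas) / on_demand_rate
```

For instance, with hypothetical rates of 0.5 per hour for the always-on replica and 0.8 per hour for on-demand instances, scale-to-zero stays cheaper as long as the service is busy fewer than about 456 hours a month. Real pricing details (per-vCPU and memory billing, request fees, minimum instances, cold-start overhead) would need to be plugged in for an actual decision.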

Conclusions

In summary, large language models represent a significant advancement for document understanding tasks. They demonstrate performance comparable to, and at times surpassing, that of traditional algorithms, particularly when dealing with limited amounts of data. This breakthrough has revolutionized the field of natural language processing, empowering developers to unlock the full potential of their data. By using Google Cloud’s capabilities, developers and data scientists can now harness the efficiency and expressiveness of these large foundation models to create cutting-edge applications in the domain of Natural Language Understanding. Google Cloud simplifies the development and deployment processes, offering a seamless and cost-effective solution. With advanced capabilities like enhanced semantic comprehension, precise text extraction, and efficient knowledge distillation, coming up with innovative applications only takes a fair amount of creativity and, as always, access to decent data sources.

So, gather diverse datasets, experiment with novel approaches, and push the boundaries of what’s possible. The more you explore, the more you will uncover the untapped potential and the amazing possibilities that Large Language Models bring to Document Understanding.