The first half of the 20th century saw a remarkable concentration of brilliant minds, especially in science, who literally changed the world we live in: think of Einstein, Heisenberg, and Schrödinger in physics (and many others could be mentioned).

Science and technology progressed enormously in every field, and mass communication (first television, then computers, and finally, at the end of the century, the Internet and the Web) irreversibly changed the world, society, and all of us. Naming the most influential scientist of the 20th century is therefore difficult and probably meaningless, but one personality undoubtedly stands out: Alan Turing.

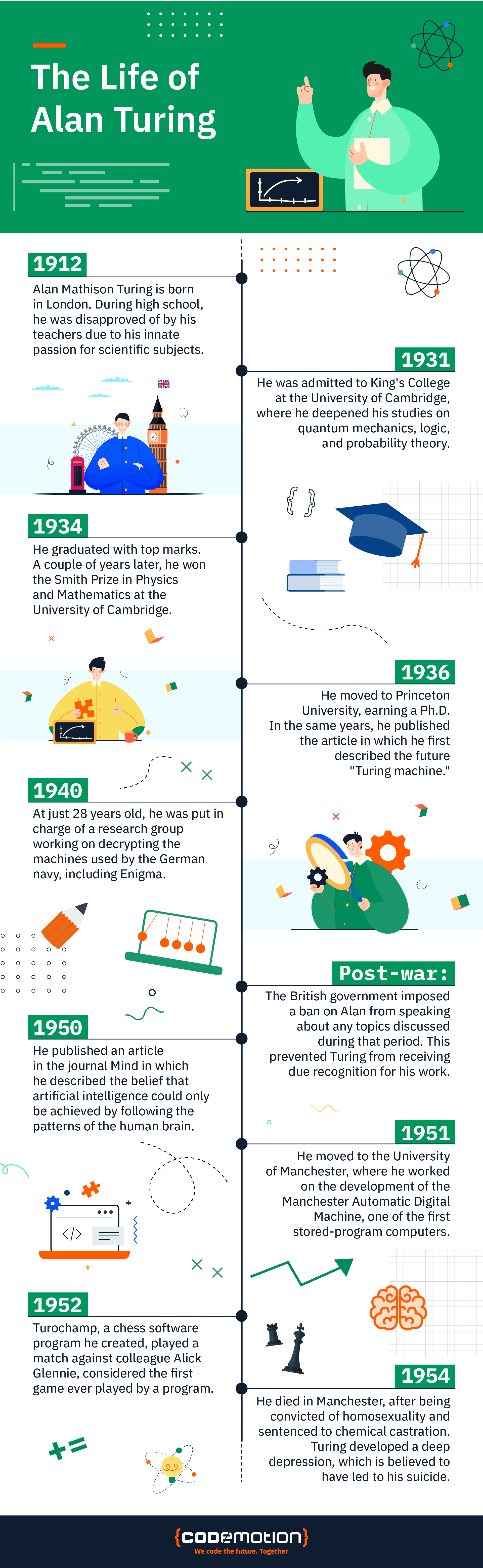

Let’s quickly recap Alan Turing’s life with the infographic below.

The four reasons that make Alan Turing a special scientist

Turing’s life was short: born in 1912, he died in 1954, probably by suicide. Yet in that brief span he made contributions that other great figures could only achieve collectively. There are at least four reasons that make him one of the most important people of the 20th century: let’s list them.

The theory of computability

We humans have been counting since prehistoric times: one of the oldest artifacts bearing traces of counting, the Ishango bone, a baboon fibula found in Central Africa, is about 20,000 years old! Today we all learn to count in elementary school, and then to calculate using formulas and algorithms.

The concept of an algorithm has been used for millennia: although the word derives from the name of the great medieval Islamic scholar al-Khwarizmi, the Romans, Greeks, Chinese, Indians, Babylonians, and many other civilizations all used algorithms. But for a precise definition of what an algorithm is, we have to wait until 1936!

In that year, the young Alan Turing published a paper with the enigmatic title “On Computable Numbers, with an Application to the Entscheidungsproblem” (German for “decision problem”, a question posed by David Hilbert), in which he gave a mathematical definition of an algorithm in terms of the now famous Turing machines. The paper gave rise to the theory of computability, one of the theoretical pillars of computer science.
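To give a feel for how simple a Turing machine really is, here is a minimal Python sketch of a one-tape machine: a head reads a symbol, and a rule table says what to write, which way to move, and which state to enter next. The rule-table encoding, the state names and the binary-increment example are our own illustration, not taken from Turing’s 1936 paper.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the non-blank part of the tape.

    rules maps (state, symbol_read) to (next_state, symbol_to_write, move),
    where move is -1 (left), +1 (right) or 0 (stay). The machine stops in state "halt".
    """
    cells = defaultdict(lambda: blank, enumerate(tape))  # unbounded tape, blank by default
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        # Read the current cell, then write, change state and move as the rule says.
        state, cells[head], move = rules[(state, cells[head])]
        head += move
        steps += 1
    written = [i for i, symbol in cells.items() if symbol != blank]
    if not written:
        return ""
    return "".join(cells[i] for i in range(min(written), max(written) + 1))


# A machine that adds 1 to a binary number: scan right to the end, then carry leftwards.
INCREMENT = {
    ("start", "0"): ("start", "0", +1),
    ("start", "1"): ("start", "1", +1),
    ("start", "_"): ("carry", "_", -1),
    ("carry", "1"): ("carry", "0", -1),
    ("carry", "0"): ("halt", "1", 0),
    ("carry", "_"): ("halt", "1", 0),
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
```

Note that the machine’s “program” is just a table of data handed to a generic simulator, which hints at Turing’s universal machine: one machine that can run any other machine described to it.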

During the same period, the American Alonzo Church (every time you use a lambda in your program, you are paying him tribute!) had defined lambda calculus, a symbolic formalism meant as an alternative foundation for the mathematical logic of the time. His students Kleene and Rosser showed that, as a formalization of the whole of logic, the system was inconsistent; restricted to computable functions, however, it succeeded in describing them, and they turned out to coincide with the recursive functions.
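To show how lambda calculus can express computation using nothing but functions, here is a small Python sketch of Church numerals, the standard encoding in which the number n is the function that applies f to x exactly n times. Python’s `lambda` stands in for the calculus’s λ; the names `zero`, `succ`, `add`, `mul` and `to_int` are our own illustrative choices.

```python
# Church numerals: the numeral n takes a function f and returns "f applied n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))              # one more application of f
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))  # apply f n times, then m more times
mul = lambda m: lambda n: lambda f: m(n(f))                  # repeat "f applied n times", m times


def to_int(n):
    """Convert a Church numeral to a Python int by counting how many times f is applied."""
    return n(lambda k: k + 1)(0)


one = succ(zero)
two = succ(one)
three = add(one)(two)
print(to_int(mul(two)(three)))  # 6
```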

Turing published his article at almost the same time as Church, and independently of him; he then earned his doctorate at Princeton in 1938 under Church’s supervision. The hypothesis that the intuitive notion of an algorithm is captured exactly by Turing machines, or equivalently by Church’s lambda calculus, is now called the Church-Turing thesis.

Cracking the Nazi codes

Turing’s mathematical career would have continued in the United States, at prestigious Princeton, which welcomed many European geniuses from Einstein to Gödel to von Neumann, had the winds of war not blown darkly over Europe. Back in the United Kingdom, at 28 years old, Turing found himself leading the group of cryptographers who, in the secret laboratories of Bletchley Park, worked on deciphering the codes the Nazis produced with the Enigma machine, an ingenious cryptographic device.

In Poland, the mathematician Marian Rejewski had studied Enigma and built “anti-Enigma” machines to try to decipher its messages. Building on that work, Alan Turing succeeded in simplifying the structure of these machines and making them more efficient, thus deciphering the Nazi codes and contributing decisively to the Allied victory on the Mediterranean and Atlantic fronts.
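For readers curious about what made Enigma “ingenious”, here is a heavily simplified toy model in Python: a single stepping rotor plus a reflector (the real machine had three or more rotors and a plugboard). The wirings below are only illustrative. The sketch shows two structural properties the real machine shared: the same setting that encrypts a message also decrypts it, and a letter is never enciphered as itself, a weakness the Bletchley Park codebreakers exploited.

```python
import string

ALPHABET = string.ascii_uppercase
# Illustrative wirings: ROTOR is any permutation of the alphabet; REFLECTOR pairs
# letters off (A<->Y, B<->R, ...) so that no letter is mapped to itself.
ROTOR = "EKMFLGDQVZNTOWYHXUSPAIBRCJ"
REFLECTOR = "YRUHQSLDPXNGOKMIEBFZCWVJAT"

FORWARD = [ALPHABET.index(ch) for ch in ROTOR]
BACKWARD = [ROTOR.index(ch) for ch in ALPHABET]  # inverse permutation of FORWARD
REFLECT = [ALPHABET.index(ch) for ch in REFLECTOR]


def encipher(text, start=0):
    """Encipher (or decipher) uppercase letters with one stepping rotor and a reflector."""
    out = []
    pos = start
    for ch in text:
        pos = (pos + 1) % 26  # the rotor advances one step before each letter
        c = ALPHABET.index(ch)
        c = (FORWARD[(c + pos) % 26] - pos) % 26   # through the rotor
        c = REFLECT[c]                             # bounce off the reflector
        c = (BACKWARD[(c + pos) % 26] - pos) % 26  # back through the rotor
        out.append(ALPHABET[c])
    return "".join(out)


ciphertext = encipher("ATTACKATDAWN", start=5)
print(ciphertext)
print(encipher(ciphertext, start=5))  # ATTACKATDAWN
```

Because the signal passes through the rotor, bounces off a reflector that never maps a letter to itself, and comes back through the same rotor, each key press applies a self-inverse substitution with no fixed points; the stepping rotor changes that substitution from one letter to the next.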

Indeed, it was through Enigma-encrypted messages that the Nazi U-boats were told which targets to hit. Because of state secrecy, Turing received no recognition for this work: what he received instead from his own government, in 1952, just seven years after the end of the war, was homophobic persecution that was probably one of the causes of his death.

The invention of the computer

The question of who invented the computer is as intricate as that of who invented writing: there was no single inventor, but several groups working at the same time designed and built the first electromechanical and electronic machines with the structure and theoretical capacity of today’s computers.

On this side of the Atlantic, the credit goes to Turing. Having proposed an abstract machine model to define the concept of an algorithm, and having shown that a single Turing machine can be programmed to execute any algorithm (the universal machine), he naturally arrived at the idea of building such a machine physically. Given the technology of the time, this meant imagining how to build the machine’s memory and the circuits that execute its instructions, and deciding which instructions to equip it with.

At the National Physical Laboratory near London, and then in Manchester, Alan Turing worked on two machines that today we would describe as having a RISC architecture: his idea was to include only the essential hardware, implementing just a few basic instructions and leaving it to software to perform complex operations. This approach was an alternative to the one being pursued in the United States under the guidance of John von Neumann, who had once offered Turing a position as his assistant at Princeton. We can only imagine what they would have achieved together!
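As a rough illustration of the “minimal hardware, rich software” idea, the following Python sketch imagines a machine whose only arithmetic primitives are addition, one-bit shifts and a low-bit test on 16-bit words, and builds multiplication in software with the classic shift-and-add method. The primitives and the word size are assumptions made for the example, not the instruction set of Turing’s machines.

```python
WORD = 0xFFFF  # assume a 16-bit word: results wrap around like a hardware register


# The imaginary machine's only "hardware" primitives.
def hw_add(a, b):
    return (a + b) & WORD


def hw_shift_left(a):
    return (a << 1) & WORD


def hw_shift_right(a):
    return a >> 1


def hw_low_bit(a):
    return a & 1


def multiply(a, b):
    """Software multiplication built only from the primitives above (shift-and-add)."""
    result = 0
    while b:
        if hw_low_bit(b):              # if the current bit of b is set...
            result = hw_add(result, a)  # ...add the correspondingly shifted a
        a = hw_shift_left(a)           # a, 2a, 4a, ...
        b = hw_shift_right(b)          # move on to the next bit of b
    return result


print(multiply(6, 7))  # 42
```

The hardware stays tiny, and the more complex operation lives entirely in a short routine; trading circuitry for software in this way is the essence of the RISC-like philosophy described above.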

The architecture of a computer that holds in memory both the data and the program that processes it is now known as the von Neumann architecture, but it can ultimately be traced back to Turing’s projects and to the idea behind his famous abstract machine.
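To make the stored-program idea concrete, here is a toy accumulator machine in Python whose program and data live in the same list of numbers. The instruction encoding (opcode × 100 + address) and the four opcodes are invented for illustration and do not correspond to any historical machine.

```python
def run(memory, max_steps=1000):
    """Fetch-decode-execute loop for a toy accumulator machine.

    Each instruction is an int, opcode * 100 + address, stored in the same
    list as the data it operates on.
    """
    acc, pc = 0, 0
    for _ in range(max_steps):
        instruction = memory[pc]                 # fetch from the shared memory
        opcode, addr = divmod(instruction, 100)  # decode
        pc += 1
        if opcode == 0:    # HALT
            return memory
        elif opcode == 1:  # LOAD: acc <- memory[addr]
            acc = memory[addr]
        elif opcode == 2:  # ADD: acc <- acc + memory[addr]
            acc += memory[addr]
        elif opcode == 3:  # STORE: memory[addr] <- acc
            memory[addr] = acc
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


program_and_data = [
    110,               # 0: LOAD 10
    211,               # 1: ADD 11
    312,               # 2: STORE 12
    0,                 # 3: HALT
    0, 0, 0, 0, 0, 0,  # 4-9: unused
    20,                # 10: first operand
    22,                # 11: second operand
    0,                 # 12: result
]
print(run(program_and_data)[12])  # 42
```

Because instructions are just numbers in memory, a STORE aimed at an instruction’s address would rewrite the program itself, which is exactly what keeping program and data in one memory makes possible.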

Artificial intelligence

In the last years of his life, Turing focused on exploring the limits and possibilities of the machines he had helped build, the first computers in history. His idea was that humans too, or rather their brains, are machines that process external stimuli using algorithms, so the human mind is not unlike a Turing machine running a sophisticated universal program that enables it to learn and react to its environment.

Although the term artificial intelligence, and the field of study it names, would only emerge with the Dartmouth workshop of 1956, Turing proposed his idea of a thinking machine in a 1948 report and developed several artificial intelligence concepts in 1950 and 1951. In particular, he understood that computers could be programmed to translate between languages, answer questions posed in a human language, play chess, checkers, and other games, and prove mathematical theorems.

Turing devised his famous test with precisely this kind of linguistic ability in mind, and went so far as to predict that machines with a storage capacity of about 10^9 could sustain a conversation convincing enough to pass for human (for comparison, GPT-3, an ancestor of ChatGPT, has 175 billion parameters). In its original formulation, the test is subtler than it is usually described: the computer takes the part of a man in a three-way conversation, and its goal is to convince the interrogator that it is actually the woman.

Turing clearly suggested two ideas that his immediate successors disregarded, and that only became established after the second AI winter:

- AI should not be built “already educated” but rather designed like a newborn, capable of learning everything it needs on its own and through contact with its environment.
- Elements of randomness must be introduced into AI programs, both to avoid the combinatorial explosion of exploring every possible case and because nature itself relies on chance: evolution by natural selection works on random variation.
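As an illustration of the second idea, here is a small Python sketch of min-conflicts local search on the eight-queens puzzle, a technique from later AI research rather than anything Turing wrote. Instead of enumerating all 8^8 = 16,777,216 ways of placing one queen per row, it starts from a random placement and repeatedly moves a randomly chosen attacked queen to its least-attacked column, breaking ties at random. The function names and the step and restart limits are assumptions chosen for the example.

```python
import random


def attacks(cols, row, col):
    """Count the queens (one per row, cols[r] is its column) attacking square (row, col)."""
    return sum(
        1
        for r, c in enumerate(cols)
        if r != row and (c == col or abs(c - col) == abs(r - row))
    )


def min_conflicts_queens(n, max_steps=1000, max_restarts=100, seed=None):
    """Randomized local search for the n-queens puzzle; returns a list of columns or None."""
    rng = random.Random(seed)
    for _ in range(max_restarts):
        # Random starting point: one queen per row, each in a random column.
        cols = [rng.randrange(n) for _ in range(n)]
        for _ in range(max_steps):
            attacked = [r for r in range(n) if attacks(cols, r, cols[r]) > 0]
            if not attacked:
                return cols  # no queen is attacked: a solution
            row = rng.choice(attacked)  # pick an attacked queen at random
            scores = [attacks(cols, row, c) for c in range(n)]
            best = min(scores)
            # Move it to a least-attacked column, breaking ties at random.
            cols[row] = rng.choice([c for c in range(n) if scores[c] == best])
    return None  # give up after max_restarts random restarts


solution = min_conflicts_queens(8, seed=42)
print(solution)
```

The random choices do two jobs here: they let the search skip the vast majority of the placements an exhaustive search would have to examine, and the random tie-breaking and restarts keep it from cycling forever in the same dead end.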

These two ideas, modern as they are, underpin much of the artificial intelligence we see around us today.